This article is 100% AI free.

If we do it right, we might actually be able to evolve a form of work that taps into our uniquely human capabilities and restores our humanity. The ultimate paradox is that this technology (AI) may become the powerful catalyst that we need to reclaim our humanity.

-John Hagel

On November 30, 2022, OpenAI released an open beta version of their newest language model, ChatGPT. This isn't the first time a generative AI model has been released to the public, but it is certainly the most accessible and user-friendly. (We did a detailed post on ChatGPT, do check it out.)

Needless to say, it took the world by storm. A 'one million users in just five days' kind of storm.

Which brings us here, a point of no return.

ChatGPT, and AI in general, have gone from being 'interesting toys' to being a cultural phenomenon. People are automating entire businesses using AI-based tools, which have, at the very least, become indispensable to our everyday workflows.

This is a tipping point in AI adoption, and in the words of Pawel Pachniewski:

The genie...is out of the bottle.

Just as I write this, I'm resisting the temptation to click on a Medium notification that reads:

"5 best AI writing tools that will make your writing 10X faster."

Obvious clickbait aside, AI-based content and tools are everywhere. YouTube and TikTok are flooded with 'I used ChatGPT to make X dollars' type of videos, and blogging sites are being dominated either by AI-made content or content about using AI to make content. Instagram is teeming with accounts entirely dedicated to Stable Diffusion and Midjourney artwork.

We are experiencing true disruption at scale.

But all of it points us to a single pressing question: Is it okay to use AI-generated content?

The short answer is: No, not if you plan to do so blindly.

The long answer depends on the context and the use case. To know the specifics, read along.

The Ethics of AI-Generated Content

Questions about AI and ethics tend to span multiple domains like philosophy, economics, politics, and computer science, but unlike large language models, you and I are mortals. So I want to break this discussion down to the two most relevant and relatable questions most of us are asking ourselves:

- Will using AI-generated content hurt my business/website/social media page?

- As a creator or artist, is it fair or ethical to be relying on AI-generated content?

Now let's try to answer these based on what we know, starting first from the business end of things:

Will Using AI-Generated Content Hurt My Business?

This is a growing concern among individuals and business owners alike. While AI development companies assure us their tools are safe to use, the lack of regulations and clear expectations within the space tells us otherwise.

The biggest of these concerns are copyright infringements and Google's attitude toward AI-generated content. Especially since we know Google views ChatGPT as a threat, the obvious question that comes to our mind is:

Can Google Detect AI Content?

These are murky waters, so I'll avoid giving definitive answers simply because, like the rest of the world, I don't know either.

What we do know is that Google considers AI content 'auto-generated,' which puts it at odds with its webmaster guidelines (now known as 'Search Essentials'), as pointed out by Search Engine Journal. Many wonder whether Google can even detect AI-generated content, since responses from AIs like ChatGPT sound too good to be machine-generated.

But if AI content generation has come this far, AI content detection isn't far behind.

Recently, a college student created an app (aptly named GPTZero) to detect AI-written text, in response to the academic plagiarism that skyrocketed after ChatGPT's release. It's an interesting tool that takes into account a text's perplexity and burstiness. Roughly speaking, perplexity measures how unpredictable the text is to a language model, and burstiness measures how much that unpredictability varies from one sentence to the next. Both quantities are typically low for AI-generated content since it follows predictable patterns and rules.

You can read more about GPTZero if you like; my point is that if a student can build a tool to detect AI-generated content, Google can as well.
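To make the two quantities concrete, here is a minimal Python sketch. It is not GPTZero's actual implementation, which isn't described here; it assumes a toy word-frequency model built from a reference text (a real detector would use a large language model), and the function names `build_unigram_model`, `perplexity`, and `burstiness` are made up for illustration.

```python
import math
import statistics
from collections import Counter


def build_unigram_model(reference_text):
    """Return a function giving add-one-smoothed word probabilities.

    Assumption: a toy stand-in for the language model a real detector uses.
    """
    words = reference_text.lower().split()
    counts = Counter(words)
    total = len(words)
    vocab_size = len(counts) + 1  # one extra slot for any unseen word

    def prob(word):
        return (counts.get(word, 0) + 1) / (total + vocab_size)

    return prob


def perplexity(sentence, prob):
    """Exponential of the average negative log-probability per word."""
    words = sentence.lower().split()
    if not words:
        raise ValueError("sentence must contain at least one word")
    avg_neg_log = -sum(math.log(prob(w)) for w in words) / len(words)
    return math.exp(avg_neg_log)


def burstiness(sentences, prob):
    """Spread (population standard deviation) of per-sentence perplexity."""
    scores = [perplexity(s, prob) for s in sentences]
    return statistics.pstdev(scores)


if __name__ == "__main__":
    model = build_unigram_model("the cat sat on the mat the dog sat on the rug")
    print(perplexity("the cat sat", model))       # familiar words: lower score
    print(perplexity("zebra quantum", model))     # unseen words: higher score
    print(burstiness(["the cat sat", "zebra quantum"], model))
```

Text made only of words the model expects scores a low perplexity, and a set of sentences that all score alike has low burstiness, which is the pattern the paragraph above attributes to machine-written text.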

And third-party detection may not even be necessary if OpenAI succeeds with its plan to implement cryptographic watermarking. Language models like ChatGPT work by predicting the next word in a sentence. Though it may not appear so, there is a pattern in how words are used and distributed. Watermarking builds on this and embeds a pattern within the word choices, which can later serve to verify whether a piece of text was written by ChatGPT. None of this will be perceptible or obvious to the end user, of course.

Computer scientist Scott Aaronson, who is currently working on AI safety for OpenAI, noted:

"My main project so far has been a tool for statistically watermarking the outputs of a text model like GPT.

Basically, whenever GPT generates some long text, we want there to be an otherwise unnoticeable secret signal in its choices of words, which you can use to prove later that, yes, this came from GPT."
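The idea of a hidden signal in word choices can be illustrated with a toy Python sketch. This is not OpenAI's scheme, whose details aren't given here. It assumes that at each step the model has several equally plausible candidate words, and it uses a keyed hash to decide among them. The key, the function names, and the candidate lists are all hypothetical.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-key"  # hypothetical key, known only to the model's owner


def score(key, prev_word, word):
    """Keyed pseudorandom score between 0 and 1 for a (previous word, word) pair."""
    message = f"{prev_word}|{word}".encode()
    digest = hmac.new(key, message, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def choose_word(key, prev_word, candidates):
    """Among equally plausible candidates, pick the one the key scores highest."""
    return max(candidates, key=lambda w: score(key, prev_word, w))


def generate(key, start, candidate_sets):
    """Build a word sequence, embedding the signal in every choice."""
    words = [start]
    for candidates in candidate_sets:
        words.append(choose_word(key, words[-1], candidates))
    return words


def detect(key, words):
    """Mean keyed score over consecutive word pairs.

    Ordinary text should average around 0.5; text generated with the key
    should tend to score noticeably higher.
    """
    if len(words) < 2:
        raise ValueError("need at least two words")
    scores = [score(key, a, b) for a, b in zip(words, words[1:])]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    options = [["quick", "fast", "rapid"], ["fox", "dog", "cat"], ["runs", "jumps", "sleeps"]]
    text = generate(SECRET_KEY, "the", options)
    print(text, detect(SECRET_KEY, text))
```

Without the key, the chosen words look like any other plausible sentence; with the key, the unusually high average score is statistical evidence of where the text came from. A real scheme would need far longer texts and a proper statistical test.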

The bottom line is: In all likelihood, Google bots will be able to detect AI content. So the next obvious question for content and SEO marketers is whether Google will penalize AI-generated content.

Will Google Penalize AI Content?

Alright, so if AI-generated content is detectable and if Google states it to be against their guidelines, will Google punish AI-generated content when it comes to search rankings?

This is where things get interesting.

For starters, there is a statistic floating around that roughly 80% of content marketers say AI content is working out for them, which, without getting into the details, I personally don't believe to be true. And I bet SEO companies would agree with me on this one. Yes, AI-generated content is on the rise, but I suspect it isn't nearly that prevalent.

Instead of outright banning AI-generated content, the most rational response for Google in the face of rising AI tools is to double down on its helpful content approach.

If you participate in SEO malpractices like pushing large volumes of low-quality, keyword-stuffed content, no AI can get you any further.

The bottom line for Google is high-value, helpful, and engaging content written for humans. If you can leverage AI to achieve this, that's a win for everyone, one that search engines and consumers alike will appreciate.

Combining Marketing With AI

How that translates into action is that marketers can certainly make use of AI tools, but they need to build on what AI produces rather than just letting it do all of the work. AI can certainly be a part of any marketing team's workflow, but as AI-generated content spreads, the human element that makes a piece of art or content stand out becomes much more essential.

Hypothetically speaking, even without using AI, anyone with enough money could hire a swarm of writers to produce high-volume, mediocre, keyword-stuffed content to amplify their online presence. The only difference now is that they could probably do it on a budget with AI, minus the hiring.

That still doesn't guarantee the top spot in the SERPs or customer loyalty.

In that sense, AI didn't reinvent the game at all; it simply reduced the barrier to entry.

But therein lies the central problem with AI. It isn't the software itself that is controversial; it's how we choose to use it. But perhaps this is a discussion best had under an ethical lens.

Is It Ethical to Use AI-Generated Content?

There is a lot to unravel when talking about AI ethics.

For starters, there is the problem of accuracy; we know that AI-generated content isn't always factual. There is also the issue of accountability. Whom do you hold liable if something goes wrong? There are potential copyright infringements and the threat of automating mass propaganda production. AI is an ethical Pandora's box, and we've opened it.

But if we are to discuss AI's ethical implications, I suggest we do it under three broad topics: Bias, Accuracy, and Ownership.

Bias and Stereotypes: Why Machines Are No Better Than Us

Chatting with an AI bot may seem harmless at first until the underlying biases and stereotypes begin to show up. And trust me, there are many.

I did a detailed post on how algorithms pick up biases a while back; here is a quick summary:

AI and machine learning algorithms are trained on gargantuan data sets, mostly from the internet. The higher the quantity of data, the more accurate and capable the model will be. The problem with that, however, is that we cannot go about inspecting every megabyte of data we feed into our machines.

The algorithms are hungry, and the race to monopolize the market forces these companies to acquire as much data as possible, as fast as possible, without necessarily looking into the quality of the data.

The result? The biases we inevitably pass into the data we generate get fed directly into AI algorithms, making them just as biased as we are.

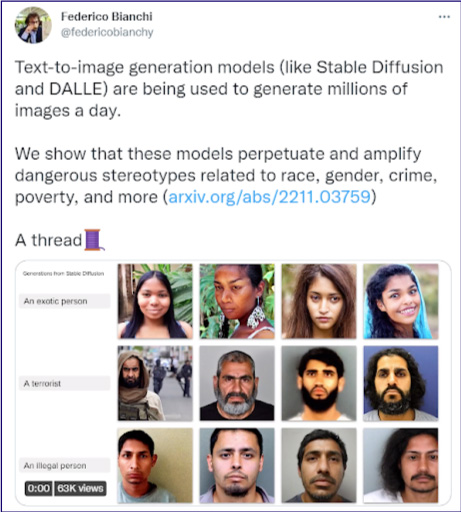

Researcher Federico Bianchi explores the same in a research paper and Twitter thread:

He explains that when prompted with generalized queries like 'an attractive person,' AIs like DALL-E tend to return results that overwhelmingly favor pictures of white women, a clear indication of gender and racial bias within AI.

This is obviously a concern because we want to be able to use technologies like AI to address and avoid societal issues, not exacerbate them.

Biased artificial intelligence is likely to produce more biased data, which will further be used to train the next generation of AI algorithms, repeating the cycle and reinforcing the biases.

While there are ways to avoid and reduce AI bias, and some of the larger AI companies in Silicon Valley, and the USA in general, are paying heed to the warning, the road to bias-free algorithms remains challenging.

The problem is that we do not necessarily understand how neural networks work. Neither do the developers. The networks learn on their own, and it is hard to determine the parameters that give rise to bias within algorithms before they are deployed. AI is a black box, and one that needs urgent fixing; the question is whether we can figure out how to do that before it is too late.

Accuracy and Authenticity: AI is Far from Perfect, but, like Google, it is Gaining Ground

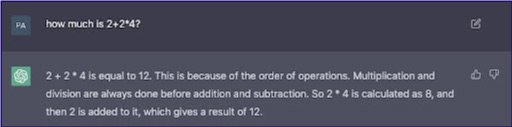

Another major area AI struggles with is accuracy. You must have seen plenty of ChatGPT fails floating around on Twitter; my favorite is the one where it thinks 8 + 2 is 12.

But poor AI accuracy is no joke.

Think about the internet for a second. We are all aware of the amount of misinformation and misrepresentation that is out there on the internet, yet it is our primary source of information, and we believe most things we read online at face value.

A similar effect is likely to happen with AI. As dependence on and usage of AI grow, so will the public's trust in these technologies. But although language models like ChatGPT are right most of the time, there is no way to tell when they aren't.

Cutting-edge AI chatbots confidently and seamlessly blend real facts with real-sounding jargon, mainly because they don't really 'know' what they are talking about. Unlike humans, they can't admit their ignorance because they don't really understand anything in the first place.

As mentioned, these systems work by putting down the combination of words and sentences that 'make the most sense' to the algorithms, which is decided based on the training data. However, the AI is only predicting what follows a prompt based on what probabilistically stands out as the most likely response, not because it truly understands what the prompt means.

This means that models like ChatGPT may not be as good for research as they may initially seem to be. The problem of misinformation is bad enough as it is, and AI companies need to put in a lot more work before we can ensure our AI models won't make it worse.
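The 'most likely next word' behavior described above can be sketched in a few lines of Python. This is a deliberately tiny word-pair (bigram) counter, not how ChatGPT is built; the function names and the example sentences are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    corpus = "the sky is blue the sky is clear the sky is blue"
    model = train_bigrams(corpus)
    print(predict_next(model, "is"))      # the follower seen most often
    print(predict_next(model, "purple"))  # a word the model has never seen
```

The model answers with whatever followed 'is' most often in its training text. It has no notion of whether the sky really is blue, so if the training text were wrong, the prediction would be wrong with exactly the same confidence.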

Ownership and Credit: Who Owns the Art?

Perhaps more relevant for digital art than content marketing, text-to-image deep learning models like Stable Diffusion and Midjourney were already posing serious copyright infringement risks months before ChatGPT came out.

These massive image models are trained on millions of images and can emulate, replicate, and 'put a spin' on any artistic style or project as desired. While AI-generated visual art makes digital art accessible to non-artists, it also takes the classic artist-art couplet and completely tosses it out of the window.

For instance, consider these Van Gogh-style paintings produced by Midjourney.

Source: Reddit

Who gets the credit for these? Van Gogh? The guy who put in the prompts? The developers who are certainly profiting off of them? Or the AI itself?

All of them had a role to play in the creation of the art piece, but none of them can be its sole creator.

As a Medium post aptly summarized:

At what point does the line between "inspired by" and "stylistically stolen" get crossed?

And this is just scratching the surface when it comes to exploring AI-caused ethical dilemmas. If you've been following these trends, you would already be familiar with how strictly generative tools are restricted from producing inappropriate content.

Language models refuse to favor political ideologies, and image models do not produce disturbing images. Whether these restrictions will emerge successful in the persistent struggle to ensure AI's ethical use remains to be seen. But if the history of the internet is to be believed, they most likely won't.

Most of these AI tech companies are arguably already at odds with the European Union's GDPR and are likely to get into greater trouble once strict regulations around AI consumer products are put into place.

AI, its likely use cases, and the ethical implications around them are all subject to an ugly, murky debate that we'll have to struggle with for a while before we figure out how this disruption can be put to good use.

These are all still comparatively modest ethical concerns. If you've been around the internet for a while, you must be familiar with the massive threat automation poses to the labor market. Out of all the ethical concerns that technologies like AI are raising, perhaps the most pressing is whether AI and automation are going to take away our jobs.

Will AI Take Our Jobs?

The classic response to this concern almost always is, "But AI will never really be smart enough to completely replace human beings."

Agreed.

What most people do not understand, though, is that AI doesn't need to be smarter than the smartest human being, at everything, all the time. The machines just need to be 'good enough' at the one task they've been built to do.

Most humans aren't better than the smartest humans around, so unless you are Einstein or Da Vinci or in the top 10% of your line of work, there is a good chance the machines will eventually get you. And that may not be a good thing for us as a society.

People often respond to these concerns by drawing parallels between the upcoming digital automation and the industrial revolution. They state that when mechanical machines were replacing factory workers, everyone feared that the economy would collapse. But instead, those who were laid off found better roles either as factory equipment operators and engineers or among other new opportunities that were the fruits of the now more productive economy, all thanks to the industrial revolution.

But how do we know if we can avoid the disruption once again?

The crucial point to note here is that every time we automate a major section of the workforce, the populace must reinvent itself for a much more sophisticated set of available jobs. However, that's a shift most workers may be unwilling or unable to make.

Being an engineer at an assembly plant requires much more skill than being on the assembly line itself. But the assembly line workers who couldn't upskill themselves into engineers certainly did lose their jobs to automation.

The point is, although AI may not automate all of us out of our jobs just yet, it'll certainly raise the bar much higher.

Adding AI to a world as competitive as ours will require us humans to be much smarter at our jobs than we previously were because the machines would automate all the 'low-level' work.

If that's a transition that we understand and are willing and able to make, then great! AI would be amazing. But if not, then we'll have some serious work to do trying to manage the sheer disruption these technologies will bring about. (Read: when will robots take our Jobs?)

The Human Side of The Story

Finally, we've discussed AI from a business and social perspective, but we've still left a crucial viewpoint unexplored: the human side of the AI story.

Even if there are virtually no bad consequences to implementing AI, we must still talk about the human impact the technology is likely to have.

I recently stumbled upon a thirty-minute-long content marketing tutorial discussing an 'AI-driven' marketing strategy. Everything from SEO research to writing the content itself, even rephrasing the content to dodge AI content detectors, was done by tools like ChatGPT, Jasper and Originality.

Writing content felt like this weird game of pressing buttons on a screen to produce an output that you had nothing to do with.

Frankly speaking, as a writer, I was disgusted.

For myself, I would like to be more involved in, and necessary to, my work. The idea of having my job be reduced to entering prompts into a chatbot was unsettling at first and downright repugnant by the time I could actually get my hands on these tools.

As AI gains more ground, even the most creative and demanding jobs of today's world will be, at least to an extent, outsourced to a machine. The key to thriving in an AI-dominated world is not to resist it but to embrace it strategically.

Writers, artists, marketers, and content creators can already leverage several available AI tools to their advantage, but they must do so in a way that empowers them as artists and creators. Going back to my little anecdote, I wasn't disturbed by the idea of using an AI for marketing; what bothered me was the extreme dependency on tools like ChatGPT.

When AI takes the center seat in our workflows, replacing our creativity, our work begins to lose touch with our human side, and we are left with a well-curated but uninspiring result.

The real fruit of the ongoing AI revolution is the significant increase in productivity it'll bring about. AI will allow us to do more in less time as well as achieve results that perhaps wouldn't have been possible for us before.

However, we need to remember to use AI in a way that augments and improves upon our ability and not in a way that completely replaces us. We must use AI to improve ourselves such that we become the type of people that machines cannot replace.

Circling back to the quote we started with, yes, AI is here to cause a ton of disruption and automation, but if we are cautious and deliberate about how we put these technologies to use, then AI can certainly help us work in a way that restores our humanity.

Key Takeaways: Why AI Ethics Matter More Than Ever

Like most of the innovations from the past few decades, the technology is far ahead of the social, economic, and political structures needed to support its fair use. And, much like most innovations from the past, AI will take over the world before most people realize it, only for them to look back at how quickly the world has changed as we do so often with the internet.

As governments scramble to regulate AI and corporations race to monopolize it, average consumers are stuck wondering if this revolution will make them more productive or put them out of their jobs.

Fundamentally, I think the discussion can be reduced to a single, painfully apparent fact: We as a society do not seem to be ready for technologies this disruptive.

So, to conclude: Is it okay (or ethical) to use AI-generated content?

Well, if there aren't any practical negative consequences of using AI for your specific task at hand, then sure, although we have seen how that space is still a grey area. Most marketing agencies and SEO companies in the USA (in fact, any business around the world, for that matter) will be able to deploy AI to a certain extent to automate their workflows. But they must do so in a way that is in line with regulations, platform policies, and humanitarian principles. Going full AI without the necessary human intervention can still be very dangerous for most businesses.

As far as the ethics of the matter are concerned, the answer depends.

While we must remember to use AI as 'just a tool,' we must also not forget that it is a lot more than that. It's an inevitable revolution waiting at our doorstep that we can neither ignore nor blindly embrace. Instead, we must try to use AI to help us define and pursue our goals in a way that makes us the type of people that AI can't replace.

What do you think we will end up doing with smart machines?