The Future Is Offline: How On-Device Intelligence with Apple’s Core ML and Google’s ML Kit Is Powering the Next Generation of Smart Apps

Key Takeaways: On-Device Intelligence

- The key drivers of on-device intelligence are growing demand for real-time data processing, user privacy, and low latency

- AI chip innovation and vertical integration of hardware and software will be top priorities for supporting on-device intelligence

- On-device intelligence can disrupt the traditional cloud-centric AI models.

- Core ML leverages Apple's ecosystem for seamless iOS integration and ML Kit excels in Android with NNAPI support

- Core ML and ML Kit can process data entirely on-device, giving users enhanced security and helping apps meet regulatory requirements

- iOS and Android apps will remain intelligent even without internet access, keeping features available in remote areas or on unreliable connections

- Developers use tools such as TensorFlow Lite, PyTorch Mobile, and Apple Core ML to build future-focused apps

- Apple’s Xcode and TestFlight support model testing and beta distribution, while Google’s Firebase enables model updates and A/B testing

- On-device AI improves security, privacy, speed, and offline capability without relying on cloud systems

- Google’s Private AI Compute processes AI requests in the cloud with what Google describes as on-device-level privacy

The rise of AI across devices marks a major architectural transformation. AI has turned phones from simple communication tools into intelligent, personalized companions: it performs tasks through virtual assistants such as Siri, Google Assistant, and Alexa, and it is revolutionizing smartphone photography, real-time language translation, health and fitness monitoring, smart battery management, and much more.

This shift, driven by powerful on-device machine learning (ML) processing, is fundamentally changing how users interact with their devices and enabling innovation, as app users demand faster, more secure, and highly responsive experiences that work wherever they are. Running AI models directly on smartphones, tablets, or wearables is now possible with leading app development software and platform-specific tools such as Apple's Core ML (and, more recently, its Foundation Models framework) and Google's ML Kit.

According to a recent research report, the global machine learning market was valued at USD 55.80 billion in 2024 and is projected to reach USD 282.13 billion by 2030, growing at a CAGR of 30.4% from 2025 to 2030. Mobile app development software helps developers build Core ML and ML Kit apps by offering essential tools such as streamlined integration, data management features, and real-time testing. For app owners, this is also an opportunity to reduce operational costs, offer offline functionality, provide strong data protection, and build brand loyalty. This trend is shaping the future of mobile app development and will be especially crucial in 2026 and beyond.

What is On-device Intelligence?

On-device intelligence, or on-device AI, is an approach in which artificial intelligence models run directly on devices such as a smartphone, watch, or car. It is not just a buzzword: it is a technology that can break the reliance on cloud servers. Normally, the data on your device is sent for processing to cloud servers such as those operated by Amazon, Microsoft, and Google.

With on-device intelligence, AI models process data directly on smartphones, laptops, and even wearables, essentially removing cloud servers from the equation. The result is stronger security and privacy, faster responses, and AI features that keep working offline.

“The biggest threat to a data center is if the intelligence can be packed locally on a chip that’s running on the device, and then there’s no need to inference all of it on one centralized data center.” Aravind Srinivas — CEO, Perplexity AI (as reported)

You no longer have to rely on cloud servers or an internet connection to analyze the data. AI algorithms run on compatible hardware: Apple's A-series and M-series chips with their Neural Engine (Apple's neural processing unit, or NPU), and Google's Tensor chips with their Tensor Processing Unit (TPU). To integrate on-device AI smoothly into future apps, developers can explore the key challenges and tips in the app development software market.

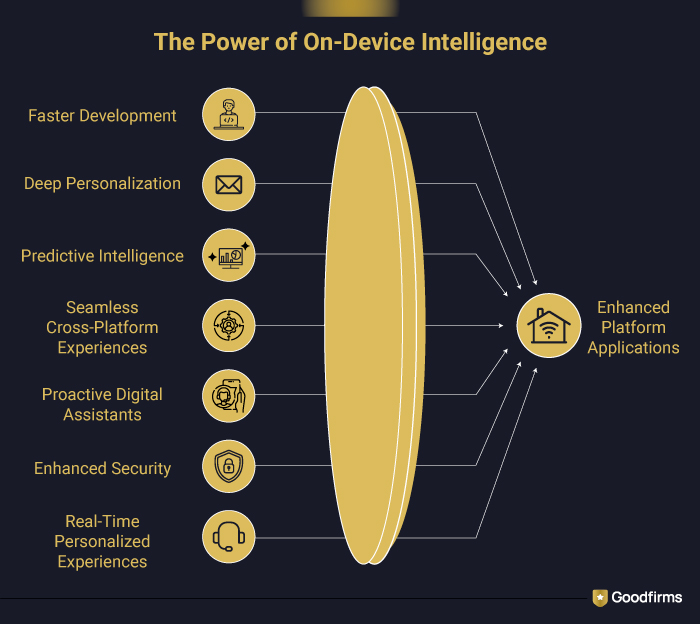

Does On-Device Intelligence Enhance Platform-Specific Applications?

Yes. On-device intelligence enhances platform-specific applications by processing data locally with on-device models. This makes applications faster, smarter, and more responsive, delivering real-time performance and low latency, stronger data privacy and security, offline functionality, highly personalized user experiences, efficient resource usage, predictive optimization, and more.

- Faster Development:

Building AI pipelines is tough, and hardware dependencies are a major roadblock. Platform frameworks for on-device intelligence remove much of this bottleneck and speed up development. Apple's Core ML and Google's ML Kit have prepared the runway for your app development to take flight: they provide ready-to-use, hardware-optimized models and APIs, so you don't have to reinvent the wheel when building AI pipelines. For instance, you can add ML Kit's on-device text recognition to an Android app in a matter of minutes, drastically reducing the development time needed to integrate this functionality.

- Deep Personalization:

With on-device intelligence, a high level of personalization is possible without compromising data security. Because the data never leaves the device, your app can continuously learn user habits such as speech patterns, reactions, usage patterns, and health stats, and adapt to each user's needs. For instance, Apple Photos creates personalized memories for Apple device users by analyzing images locally. Similarly, a note-taking app can analyze a user's writing style to improve its handwriting recognition.

- Predictive Intelligence:

AI can analyze app performance and user behaviour in real time to predict potential issues or user needs. For instance, AI can predict and preload content, optimize code for better performance, or automatically suggest relevant actions within the app.
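To make "predict and preload" concrete, here is a minimal sketch of one simple technique: a first-order Markov model that counts which screen a user tends to open after the current one and preloads the most likely candidate. It is a hypothetical illustration that keeps all counts in local memory; the class name `NextScreenPredictor`, the `preload` helper, and the screen names are invented for the example, and a production app might instead run a trained model through Core ML or ML Kit.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class NextScreenPredictor:
    """Hypothetical on-device predictor: counts screen-to-screen transitions locally."""

    def __init__(self) -> None:
        # transitions[a][b] = number of times the user went from screen a to screen b
        self.transitions: defaultdict[str, Counter] = defaultdict(Counter)
        self.last_screen: str | None = None

    def record_visit(self, screen: str) -> None:
        # Update counts in local memory only; nothing is sent to a server.
        if self.last_screen is not None:
            self.transitions[self.last_screen][screen] += 1
        self.last_screen = screen

    def predict_next(self, current: str, k: int = 1) -> list[str]:
        # Up to k most frequent follow-up screens, most likely first.
        return [screen for screen, _ in self.transitions[current].most_common(k)]


def preload(screens: list[str]) -> None:
    # Hypothetical placeholder for app-specific prefetching (e.g., warming a cache).
    for screen in screens:
        print(f"preloading {screen}")


predictor = NextScreenPredictor()
for s in ["home", "feed", "home", "feed", "home", "settings", "home", "feed"]:
    predictor.record_visit(s)

preload(predictor.predict_next("home"))  # prints "preloading feed"
```

Simple counts like these adapt immediately to each user and never leave the device, which is exactly the trade-off the paragraph above describes.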

- Seamless Cross-Platform Experiences:

On-device intelligence bridges the gap between devices and platforms by eliminating the need for constant cloud communication, while cross-platform SDKs keep behaviour consistent across operating systems, broadening the app’s reach and enhancing user engagement. For instance, without on-device processing, a photo-tagging app would need to upload every single photo to a cloud service for analysis. That process would be slow, require internet access, raise privacy concerns, and might produce slightly different results on different platforms if the backend services differ.

- Proactive Digital Assistants:

Natural language processing (NLP) models running locally enable faster, more accurate voice commands and offline language translation, improving accessibility and usability in various environments. For instance, an assistant on the user’s smartphone can analyze location data from Google Maps or Apple Maps, along with the time and calendar entries, to make proactive suggestions.

- Enhanced Security:

On-device AI enhances data privacy by minimizing the transmission of sensitive information to external servers for processing. For instance, in finance, on-device AI can analyze financial transactions securely on the user's device, reducing the risk of exposing sensitive data. This approach mitigates the security risks of data transmission and helps with compliance with data protection regulations such as GDPR and security standards such as ISO/IEC 27001.
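As a hypothetical illustration of analyzing transactions without sending them anywhere, the sketch below flags a new transaction whose amount is far from the user's own history, using a simple z-score test computed entirely on the device. The function name `is_unusual`, the 3.0 threshold, and the sample amounts are assumptions for the example; real fraud detection is far more sophisticated and could instead run a trained model through Core ML or ML Kit.

```python
import statistics


def is_unusual(history: list, amount: float, threshold: float = 3.0) -> bool:
    """Flag `amount` if it lies more than `threshold` sample standard deviations
    from the mean of the user's past transactions. Runs locally only."""
    if len(history) < 2:
        return False  # not enough local history to judge
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        return amount != mean  # every past amount was identical
    return abs(amount - mean) / stdev > threshold


past = [42.0, 38.5, 45.0, 40.0, 44.5, 39.0]  # hypothetical local history
print(is_unusual(past, 41.0))   # False
print(is_unusual(past, 900.0))  # True
```

Because the history and the decision both stay on the phone, only the outcome (for example, a prompt asking the user to confirm) ever needs to reach a server.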

- Real-Time Personalized Experiences:

Mobile applications integrated with on-device AI deliver fast response times and seamless functionality. The algorithms adapt to the user’s context and environment using data from sensors, the camera, and other built-in components. This enhances real-time personalization and improves user satisfaction and engagement as applications adapt their behaviour to user preferences. For instance, on-device AI in a mobile shopping app can provide real-time personalized product recommendations by analyzing a user’s speech patterns, expressions, behaviours, and so on.

Apple On-Device Intelligence: Apple’s Core ML

Apple’s Core ML is a machine learning framework that Apple introduced in 2017. It is available across Apple platforms such as iOS, macOS, watchOS, and tvOS, and lets developers integrate pre-trained machine learning models into their apps for fast on-device predictions.

Core ML powers features such as image recognition and natural language processing, maximizing app performance while reducing power consumption. Apple also offers Visual Intelligence, its AI-powered image analysis feature, which iOS 26 extends to on-screen content.

Explore What’s Possible with Apple On-Device Intelligence

Apple’s on-device intelligence aims to execute AI/ML models locally rather than relying on cloud servers. Core ML is the Apple-specific framework that acts as the engine making this intelligence possible and efficient within the Apple ecosystem.

With Apple on-device intelligence, it’s possible to strengthen an app's data security and improve performance by eliminating server round trips. It also works offline, making it reliable in any setting, and it incurs no ongoing server expenses, although processing power is limited by the device hardware.

Core ML - Features, Tools, Security, Uniqueness

Features:

- On-Device Processing: Core ML runs models locally on the device, with no internet connection required, eliminating network latency.

- Hardware Acceleration: Core ML automatically uses the CPU, GPU, and Apple Neural Engine (ANE) to maximize performance while minimizing power consumption and memory usage.

- Broad Model Support: Core ML framework supports a wide variety of model types for tasks such as image classification, natural language processing (NLP), sound analysis, and predictive analytics.

- Integration with Apple Frameworks: Core ML integrates seamlessly with Apple frameworks such as Vision for image analysis, Natural Language for text processing, and ARKit.

- Model Optimization: Compression techniques such as quantization, palettization, and pruning reduce model size and improve on-device performance, typically with minimal accuracy loss.

- Dynamic Model Updates: With Core ML Model Deployment, developers can host models in CloudKit and deliver updated versions to their apps without shipping a full app update, keeping models relevant.
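Quantization, mentioned in the list above, can be illustrated with a minimal sketch of symmetric 8-bit linear quantization: each float weight is mapped to an integer in [-127, 127] using one shared scale factor, cutting storage from 32 bits to 8 bits per weight at the cost of a small rounding error. This is a generic illustration of the idea, not Core ML Tools' implementation; in practice you would use the model-optimization APIs in the coremltools package. The function names and sample weights are hypothetical.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    # Recover approximate float weights for inference.
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5, -0.25]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)  # [82, -127, 0, 50, -25]
print(f"size: {len(weights) * 4} bytes as float32 -> {len(q)} bytes as int8")
print(f"max rounding error: {max_error:.4f} (never more than scale / 2 = {scale / 2:.4f})")
```

The 4x size reduction is exact, while the error per weight is bounded by half the scale, which is why well-chosen quantization usually costs little accuracy rather than none.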

Tools:

- Xcode Integration: Xcode has built-in Core ML support for Apple platforms and automatically generates Swift and Objective-C interfaces for imported .mlmodel (and .mlpackage) files, simplifying the coding process.

- Core ML Tools (Python Package): The coremltools package converts models from popular third-party frameworks such as TensorFlow (including Keras) and PyTorch into the optimized Core ML format (.mlmodel, or .mlpackage for newer ML Program models); earlier releases also supported ONNX.

- Create ML: Create ML ships with Xcode and lets developers build and train custom models through an easy-to-use interface. It produces models directly in Core ML format, so no conversion is needed.

- Performance Reports: Xcode provides detailed performance reports, offering insights into which compute units support each operation in a model and what those operations cost.

Security:

- Data Privacy: Core ML processes user data on-device, so sensitive information is not transmitted to third-party servers.

- Model Encryption: Core ML provides built-in options for model encryption to protect proprietary machine learning intellectual property (IP) from being easily reverse-engineered or stolen.

- System Integrity: Developers can use Apple's App Attest service to verify the authenticity of their application and the integrity of the device before downloading sensitive models to add a layer of anti-fraud security.

Uniqueness:

- Apple Ecosystem Optimization: It's specifically optimized to leverage Apple's proprietary hardware (CPU, GPU, and Neural Engine in A-series and M-series chips) for maximum performance and power efficiency.

- Domain-Specific API: It provides high-level APIs across all Apple operating systems to simplify integration.

- Cloud-Optional Model: Core ML is designed to be fully functional offline, so it can offer services in remote areas or where connectivity is inconsistent.

- Seamless Developer Experience: Integration with Xcode and Core ML Tools for model conversion helps iOS developers implement machine learning features easily, without deep data science expertise.

Use Cases: Third-Party Apps Using Apple's Core ML

Snapchat has expanded its AI-powered features with the launch of Snap OS v5.58, which includes new Lenses, and it integrates Core ML for real-time AR filters, Lenses, and generative AI features. Developers can use these APIs on Snap’s AR glasses to access cloud-hosted LLMs that convert 2D images into stunning 3D effects with realistic depth.

Tinder's Photo Selector uses on-device AI to simplify facial recognition and photo curation from the camera roll. Tinder uses on-device technologies such as Apple’s Vision framework, Core ML, and TensorFlow Lite to perform these operations locally on users’ smartphones.

Spotify’s AI DJ uses machine learning algorithms to transform how we consume and interact with audio content. It enhances the listening experience by offering a personalized soundtrack, analyzing vast datasets and cross-referencing them with each listener's preferences, including time of day and current mood.

Pinterest uses a wide range of AI and machine learning techniques to personalize content and enhance visual search, including on-device capabilities. By deploying these deep learning and ML methods, Pinterest lets users customize each dashboard with high-quality, relevant content, which helps it maintain high user engagement and retention.

Google’s ML Kit:

Built on Google’s machine learning expertise, ML Kit is Google’s on-device intelligence SDK that developers can use to build powerful applications. In June 2020, Google began offering ML Kit’s on-device APIs for Android and iOS as a standalone SDK, separate from Firebase.

ML Kit packages this expertise in an easy-to-use SDK that helps mobile developers build more engaging, personalized, and optimized iOS and Android apps that run ML on the device. Developers can also tap into on-device generative AI in their Android apps with Gemini Nano and ML Kit's GenAI APIs.

Exploring What’s Possible with Google’s ML Tools

Google's machine learning (ML) capabilities for developers span a comprehensive suite of tools, from pre-trained APIs for quick integration to fully managed platforms. They also let developers build custom, large-scale AI solutions and deploy them across platforms.

Google's ML tools cater to various expertise levels and enable developers to create intelligent applications across numerous industries leveraging ML Kit APIs for vision and natural language tasks and other features that run on-device for real-time performance and offline use.

Google ML - Features, Tools, Security, Uniqueness

Features and Tools

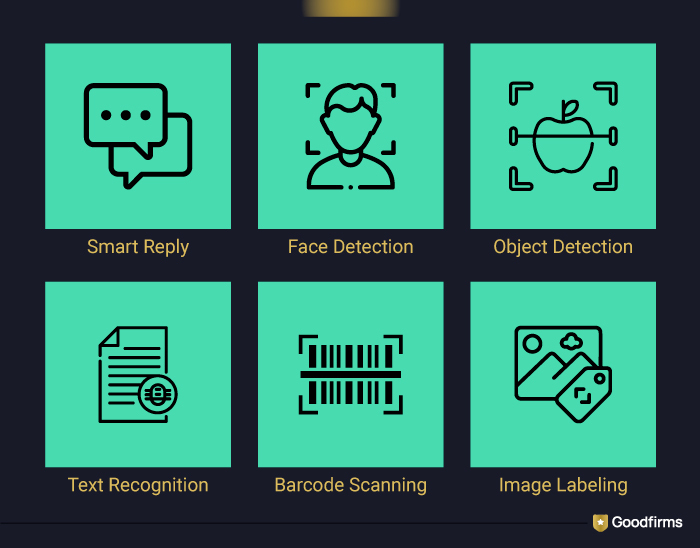

- Vision APIs: APIs for object detection, text recognition (OCR), and custom image classification.

- Barcode Scanning: Scans standard 1D and 2D formats and parses structured data such as URLs, contact details, and Wi-Fi credentials.

- Face Detection: Locates faces and identifies facial features in images or real-time video.

- Image Labeling: Identifies objects, places, and concepts in images, providing confidence scores.

- Object Detection and Tracking: Locates and tracks objects in a static image or live camera feed.

- Pose Detection (Beta): Detects and tracks body landmarks.

- Text Recognition: Recognizes text in various languages from images or documents.

- Document Scanner (Beta): Provides a UI for digitizing documents with automatic capture and editing tools.

- Natural Language APIs: Language identification automatically determines the primary language of a given text snippet.

- Translation: Translates text between numerous languages using the same models as the Google Translate app.

- Smart Reply: Generates reply suggestions for casual text conversations (English only).

- GenAI APIs (Beta): Uses Gemini Nano for on-device summarization, proofreading, rewriting, and image description.
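Barcode Scanning's "parses structured data" means turning a raw payload into typed fields. As a hedged illustration of what that involves, the sketch below parses the widely used `WIFI:` QR-code text format (for example `WIFI:S:MyNet;T:WPA;P:secret;;`) into SSID, security type, and password. ML Kit performs this parsing for you and exposes the results on its barcode objects; this standalone function, its name, and its simplified escaping rules are assumptions for illustration only.

```python
from __future__ import annotations


def parse_wifi_qr(payload: str) -> dict[str, str]:
    """Parse a 'WIFI:S:<ssid>;T:<type>;P:<password>;;' payload into a dict.

    Simplified: handles backslash-escaped characters (e.g. '\\;') but not
    every variant seen in the wild.
    """
    prefix = "WIFI:"
    if not payload.startswith(prefix):
        raise ValueError("not a Wi-Fi QR payload")
    fields: dict[str, str] = {}
    key, value, in_value, escaped = "", "", False, False
    for ch in payload[len(prefix):]:
        if escaped:  # previous char was a backslash: take this one literally
            value += ch
            escaped = False
        elif ch == "\\":
            escaped = True
        elif not in_value and ch == ":":  # first ':' separates key from value
            in_value = True
        elif ch == ";":  # ';' ends a field
            if key:
                fields[key] = value
            key, value, in_value = "", "", False
        elif in_value:
            value += ch
        else:
            key += ch
    names = {"S": "ssid", "T": "security", "P": "password", "H": "hidden"}
    return {names.get(k, k): v for k, v in fields.items()}


print(parse_wifi_qr(r"WIFI:S:Cafe\;Guest;T:WPA;P:pa55word;;"))
# {'ssid': 'Cafe;Guest', 'security': 'WPA', 'password': 'pa55word'}
```

Handling escapes is the subtle part: an SSID containing a semicolon would otherwise be split into two bogus fields.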

Security and Privacy

- On-Device Processing: On-device APIs process input data locally and do not send it to Google servers, which mitigates risk and strengthens user privacy and security. Google has also launched Private AI Compute, which processes AI requests in Google's cloud with what Google describes as on-device-level privacy.

- Data Collection for Metrics: ML Kit APIs may contact Google servers to receive bug fixes and updated models and to send performance and usage metrics; this traffic is encrypted with HTTPS.

- Developer Responsibility: Developers must inform their users about Google's processing of ML Kit metrics data and complete app store data disclosure requirements to ensure user privacy and legal compliance.

- Built-in Security: ML Kit relies on Google's security infrastructure and on encryption such as HTTPS, which uses Transport Layer Security (TLS), to protect data in transit against online security threats.

Uniqueness

- Ease of Use: It provides pre-trained models and a simple API interface, making it effortless for developers to integrate features with minimal ML experience.

- Hybrid On-Device and Cloud Options: Developers can choose between on-device APIs and cloud-based APIs.

- Cross-Platform Support: The SDK offers consistent APIs for both Android and iOS platforms.

- Custom Model Support: Developers can integrate their own custom TensorFlow Lite models and dynamically deploy and update them via Firebase.

- Integration with Google Play Services and Firebase: Integration with the Google ecosystem streamlines the development and testing process.
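The custom-model workflow described above usually pairs a model bundled with the app with an optional newer version fetched at runtime. The sketch below shows only that decision logic under stated assumptions: the `ModelInfo` type, the version numbers, and the Wi-Fi-only download rule are hypothetical, and the actual download would go through Firebase ML's model downloader rather than this code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInfo:
    name: str
    version: int


def choose_model(bundled: ModelInfo, remote: ModelInfo | None,
                 cached: ModelInfo | None, on_wifi: bool) -> tuple[ModelInfo, bool]:
    """Return (model_to_use_now, should_download_remote).

    The bundled model is always present, so inference keeps working offline.
    """
    # Best model already on the device: bundled or previously downloaded.
    local = max([m for m in (bundled, cached) if m is not None], key=lambda m: m.version)
    needs_update = remote is not None and remote.version > local.version
    # Hypothetical policy: only fetch new models on Wi-Fi to save mobile data.
    return local, needs_update and on_wifi


bundled = ModelInfo("labeler", 1)
remote = ModelInfo("labeler", 3)
print(choose_model(bundled, remote, cached=None, on_wifi=False))
# (ModelInfo(name='labeler', version=1), False)
print(choose_model(bundled, remote, cached=ModelInfo("labeler", 2), on_wifi=True))
# (ModelInfo(name='labeler', version=2), True)
```

Separating "which model to run now" from "whether to fetch a newer one" means the app never blocks inference on a network request.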

Use Cases: Third-Party Apps Using Google ML Kit

Loose It, a calorie counter, diet plan, and nutrition tracker app, uses ML Kit’s on-device text recognition to help users read nutrition labels in real time, even without a data connection.


Source: Loose It
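Text recognition returns raw strings, so a nutrition app still has to turn them into numbers. A hypothetical post-processing step might look like the sketch below, which pulls calories and a few macronutrients out of recognized label text with regular expressions. The field names, patterns, and sample text are assumptions for illustration; they are not Loose It's implementation or part of ML Kit's API.

```python
from __future__ import annotations

import re

# Hypothetical patterns: a nutrient name followed by a number and an optional unit.
PATTERNS = {
    "calories": re.compile(r"calories\s*(\d+(?:\.\d+)?)", re.IGNORECASE),
    "total_fat_g": re.compile(r"total\s+fat\s*(\d+(?:\.\d+)?)\s*g", re.IGNORECASE),
    "protein_g": re.compile(r"protein\s*(\d+(?:\.\d+)?)\s*g", re.IGNORECASE),
}


def parse_nutrition_text(ocr_text: str) -> dict[str, float]:
    """Extract nutrient values from on-device OCR output; missing fields are skipped."""
    values: dict[str, float] = {}
    for field, pattern in PATTERNS.items():
        match = pattern.search(ocr_text)
        if match:
            values[field] = float(match.group(1))
    return values


sample = "Nutrition Facts\nServing size 1 cup\nCalories 250\nTotal Fat 12g\nProtein 5 g"
print(parse_nutrition_text(sample))
# {'calories': 250.0, 'total_fat_g': 12.0, 'protein_g': 5.0}
```

Skipping fields that fail to match, rather than guessing, lets the app ask the user to confirm only the values OCR could not read.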

Adidas has been using ML Kit’s object detection and tracking API to create a seamless, intuitive experience that lets app users detect shoes in real time. With a tap on the “try it on” button, the phone’s camera activates, and within seconds the API returns an image recognition match against hundreds of products.

Source: Adidas
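One common way to return a match against hundreds of products is a nearest-neighbour search over feature vectors (embeddings): the detected object's image is turned into a vector, and the catalog item with the most similar vector wins. The sketch below shows only that matching step using cosine similarity, with tiny made-up 3-dimensional vectors and hypothetical product names; it is not Adidas's or ML Kit's implementation, and real embeddings would come from an image model and have far more dimensions.

```python
from __future__ import annotations

import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # 1.0 means same direction, 0.0 means orthogonal (unrelated).
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_match(query: list[float], catalog: dict[str, list[float]]) -> tuple[str, float]:
    """Return the catalog item whose embedding is most similar to the query."""
    return max(
        ((name, cosine_similarity(query, vec)) for name, vec in catalog.items()),
        key=lambda item: item[1],
    )


# Made-up embeddings for illustration only.
catalog = {
    "runner_white": [0.9, 0.1, 0.3],
    "trail_black": [0.1, 0.8, 0.5],
    "court_red": [0.4, 0.2, 0.9],
}
name, score = best_match([0.85, 0.15, 0.35], catalog)
print(name)  # runner_white
```

A linear scan like this is fast enough on a phone for a catalog of a few hundred items; much larger catalogs would typically call for an approximate nearest-neighbour index.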

WPS, an office suite, lets users view and edit their documents, presentations, spreadsheets, and more. WPS uses ML Kit’s translation API to provide free, instant, offline translations, so users can translate documents as they read, write, or review them.

Source: WPS

How are Apple’s Core ML and Google’s ML Kit Redefining App Development?

Apple’s Core ML and Google’s ML Kit are fundamentally redefining app development by embedding sophisticated machine learning directly on the device, allowing developers to create smarter apps, boosting innovation, and fostering a new wave of intelligent iOS and Android applications.

Both platforms offer pre-built APIs and tools that integrate easily into iOS and Android apps, enabling features such as image generation and editing, text summarization, sentiment analysis, smart replies, text prediction, object detection, real-time translation, and much more.

Together, they let developers build privacy-centric apps that leverage platform-specific hardware, such as Apple’s Neural Engine and the Tensor Processing Unit in Google’s Tensor chips, for ultra-low-latency inference. On the Apple side, Core ML streamlines the conversion of PyTorch or TensorFlow models into optimized formats and supports generative AI, transformers, and real-time tasks, enabling features like image classification, natural language processing, and augmented reality. Custom model training with Create ML and dynamic model updates without a full app release help deliver personalized, adaptive user experiences without transmitting sensitive data externally.

What Does the Future of Apps Look Like with On-Device Intelligence?

As apps increasingly run on on-device intelligence, AI features will respond instantly, with no network delays or server dependency, and remain fully functional when users are offline. On-device intelligence will be not just a feature but the foundation of how apps function and interact with users.

For developers and businesses, staying ahead in this rapidly evolving landscape means not only adopting current AI tools, frameworks and open-source app development software solutions but also preparing for a future where the boundaries between apps and intelligent assistants blur.

The most successful mobile applications will be those that harness AI to create experiences that are more personal, intuitive, and valuable than we can imagine today.

Conclusion:

As we look ahead, on-device ML, or on-device intelligence, will play an increasingly critical role in mobile app development. The need for faster, more reliable apps with better privacy practices, exceptional user experiences, and cost-effective solutions will only add to its importance. Industries such as fintech, e-commerce, health tech, and more will need to integrate on-device AI to stay competitive and compliant with privacy regulations.

By combining AI with on-device processing, businesses can build future-ready apps with minimal reliance on cloud servers or data centers. This approach minimizes latency, increases privacy, and enhances real-time decision-making, helping businesses build powerful applications and gain a competitive advantage in the modern app arena.