In today's digital-first economy, data is no longer just a byproduct of business operations; it is a core asset. But data by itself doesn't solve problems. Organizations need scalable, secure, and accessible data products that turn raw information into actionable intelligence. At High Digital, we specialize in designing and delivering data platforms that do just that. In this article, we share the foundational principles, technologies, and lessons learned from building scalable data products across the Martech, ESG, SaaS, BI, and logistics domains.

1. What is a Data Product?

A data product is more than a dashboard or report. It's a reusable, self-serve asset built with the same care and engineering discipline as a software product. It could be a platform that aggregates emissions data for ESG reporting, a real-time campaign optimization engine for a marketing team, or a BI portal serving thousands of queries daily.

Key attributes of a modern data product:

- Designed for users (internal or external)

- Built with software engineering best practices

- Versioned, tested, and monitored

- Scalable, secure, and compliant by design

- Evolves with usage patterns and organizational goals

2. Our Principles for Scalable Data Product Design

Scalability is not an afterthought. It needs to be a design principle from day one. Here are some of the principles we apply:

- Modularity: Break products into services/modules that can evolve independently

- Data Contracts: Define clear interfaces between producers and consumers

- Infrastructure as Code: Automate deployments across environments

- Observability: Monitor not just uptime but data quality and freshness

- Security-first: Bake in governance, auditability, and compliance (ISO/IEC 27001, Cyber Essentials Plus)

- Multi-tenant support: Build for future customer scaling in SaaS contexts

- Resilience: Design for failover, retry logic, and zero-downtime deployments
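
To make the data contract principle above concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions rather than a description of any specific platform: the `EmissionsRecord` type, its field names, and the `validate` helper are all hypothetical. The idea is that a producer's payload is checked against an agreed shape at the boundary, so a breaking change fails loudly instead of flowing downstream.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class EmissionsRecord:
    """Hypothetical contract agreed between a data producer and its consumers."""
    subsidiary_id: str
    period_end: date
    co2e_tonnes: float


REQUIRED_FIELDS = {"subsidiary_id", "period_end", "co2e_tonnes"}


def validate(raw: dict) -> EmissionsRecord:
    """Turn a raw payload into a typed record, or raise ValueError if it breaks the contract."""
    missing = REQUIRED_FIELDS - raw.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")

    co2e = float(raw["co2e_tonnes"])
    if co2e < 0:
        raise ValueError("co2e_tonnes must be non-negative")

    return EmissionsRecord(
        subsidiary_id=str(raw["subsidiary_id"]),
        # Dates are assumed to arrive as ISO 8601 strings, e.g. "2024-12-31".
        period_end=date.fromisoformat(raw["period_end"]),
        co2e_tonnes=co2e,
    )
```

In practice a contract like this would usually be versioned alongside the producer's code, so consumers can see when a field is added, renamed, or retired.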

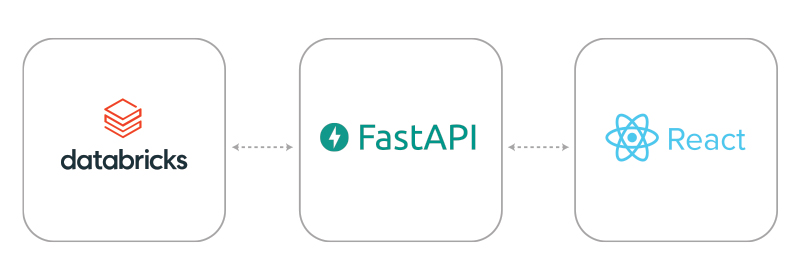

3. The Right Stack: Choosing Technologies That Scale

At High Digital, we match technologies to the problem domain, favoring those that scale reliably and integrate cleanly.

Frontend:

- React.js, Next.js, Tailwind CSS, Angular.js for a performant and responsive UI

Backend APIs:

- FastAPI and Python for high-speed asynchronous services

- Node.js for microservices and event-driven applications

- .NET for enterprise systems with legacy integrations

Data & Storage:

- Databricks: Our go-to platform for unified data analytics, ETL, and ML

- PostgreSQL and MS SQL: Trusted relational backends

- MongoDB: Document-based flexibility

Cloud & DevOps:

- Azure, AWS, and Google Cloud for infrastructure

- GitHub Actions for CI/CD pipelines

We prioritize tech that is well-supported, easily monitored, and built to handle growing workloads over time.

4. Our Partnership with Databricks: The Lakehouse Advantage

Databricks has become central to our design of scalable data pipelines. Its Lakehouse architecture allows us to combine the reliability of data warehouses with the flexibility of data lakes.

We use Databricks to:

- Unify disparate ESG and operational datasets

- Scale ML pipelines for predictive models in logistics and marketing

- Enable secure, role-based access for multi-tenant SaaS applications

- Deliver high-performance streaming and batch workloads

Our clients benefit from lower infrastructure overhead, faster time-to-insight, and native compliance support.

5. Real-World Example: ESG Reporting Platform

Challenge: A client needed a scalable platform to ingest, normalize, and report on ESG metrics from over 50 global subsidiaries.

Solution:

- Ingest pipelines built on Databricks Delta Lake

- FastAPI for secure API access

- Frontend built in Next.js + Tailwind

- Azure-hosted with GitHub Actions managing deployments

Outcome:

- Reduced reporting time by 70%

- Enabled audit-ready compliance

- Supported automated carbon and DEI metrics reporting

- Scaled from 3 internal users to 200+ client-facing analysts
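
The "normalize" step in a platform like this can be pictured with a small sketch. This is not the client implementation: the record fields (`subsidiary`, `value`, `unit`), the unit table, and the function name are hypothetical, and a production pipeline would run on Delta Lake tables rather than in-memory lists. It only shows the core idea of converting mixed reporting units to one unit before aggregating.

```python
from collections import defaultdict

# Hypothetical conversion table: factor that turns each reporting unit into tonnes.
TO_TONNES = {"t": 1.0, "kg": 0.001}


def total_co2e_by_subsidiary(records):
    """Convert every record to tonnes of CO2e and sum the results per subsidiary."""
    totals = defaultdict(float)
    for rec in records:
        unit = rec["unit"]
        if unit not in TO_TONNES:
            # Fail loudly rather than silently mixing units in a report.
            raise ValueError(f"unsupported unit: {unit!r}")
        totals[rec["subsidiary"]] += rec["value"] * TO_TONNES[unit]
    return dict(totals)
```

Rejecting unknown units, instead of guessing a conversion, is one simple way to keep aggregated figures defensible in an audit.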

6. Common Pitfalls (and How to Avoid Them)

- Starting with the wrong schema: Avoid over-engineering early models. Use flexible schemas and evolve with usage.

- No observability: Data quality issues go undetected until it's too late. Invest in monitoring.

- Ignoring DevOps: Without automated pipelines, manual deployments become the bottleneck as the product scales.

- One-size-fits-all platforms: Choose tools based on needs, not trends.

- Poor stakeholder alignment: Technical success means little if product owners aren’t involved early and often.

Avoiding these pitfalls requires a balanced mix of engineering discipline, product thinking, and cross-functional collaboration.
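
As an illustration of the observability pitfall, the sketch below shows two basic data quality checks: freshness and null rate. It is a minimal example under assumptions, with hypothetical function names and thresholds chosen by the caller. Real deployments would typically run checks like these on a schedule and feed the results into alerting.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(last_loaded_at: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """Return True if the most recent load is no older than max_age."""
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at <= max_age


def null_rate(rows: list, column: str) -> float:
    """Return the share of rows where `column` is missing or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for row in rows if row.get(column) is None)
    return nulls / len(rows)
```

A pipeline could, for example, raise an alert when `is_fresh` returns `False` for a table that should load daily, or when `null_rate` for a key column rises above an agreed threshold.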

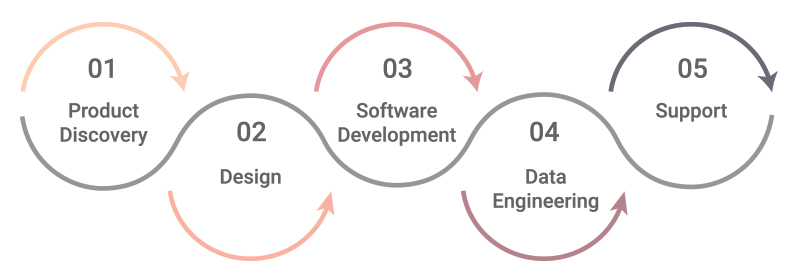

7. Agile Data Product Delivery: Our Process

We follow a collaborative, sprint-based approach that reduces risk and shortens time to value:

- Discovery: Align on goals, compliance needs, and key metrics

- Architecture: Design scalable data and app flows

- Development: APIs, pipelines, UI/UX, and infrastructure

- QA & Observability: Testing, logging, and dashboards

- Launch & Support: CI/CD + long-term monitoring

Our teams stay in sync with yours through agile ceremonies, dedicated Slack channels, and shared KPIs.

8. Beyond Build: Supporting Growth with Data Strategy

Launching a data product is just the beginning. To stay competitive, businesses need a data strategy that evolves with them.

We help our clients by:

- Setting up centralized data governance

- Planning infrastructure upgrades based on growth targets

- Offering data product audits and performance tuning

- Enabling new use cases like AI/ML or customer personalization

For scaling businesses, strategic data maturity is a competitive moat.

9. Looking Ahead: Future-Proofing Your Data Strategy

To remain competitive, businesses need to shift from ad hoc analytics to productized data thinking. This means building reusable, scalable, secure platforms that evolve over time.

At High Digital, we're helping organizations make that leap. With a stack that scales, a culture of engineering discipline, and a relentless focus on user value, we turn your data into your strongest asset.