Voice cloning isn’t just science fiction anymore—it’s real, and it’s growing fast.

But how?

With just a few minutes of recorded speech, AI can now replicate someone’s voice almost perfectly, capturing not just the words but the tone, accent, and emotion too. That’s a game-changer.

This is why AI companies in the U.S. are leading the charge, building voice cloning tools that are used in everything from virtual assistants and customer service bots to podcasts, audiobooks, and even healthcare. Imagine a doctor giving voice instructions to patients in their native language, or a brand using a familiar voice to connect more personally with customers.

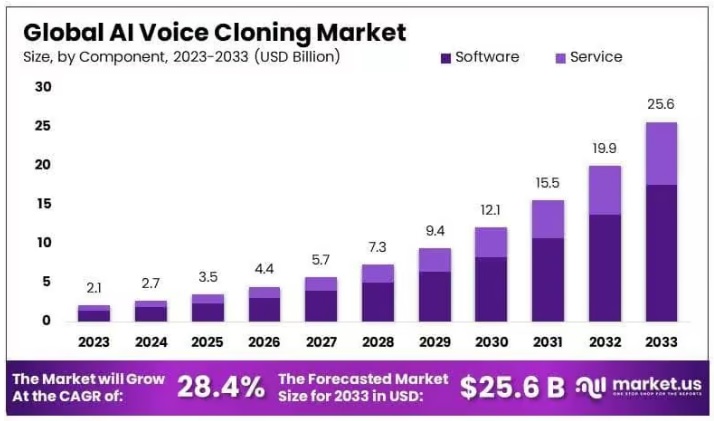

The global AI voice cloning market is expected to grow from USD 2.1 billion in 2023 to around USD 25.6 billion by 2033, a CAGR of 28.4% over the forecast period from 2024 to 2033.

(Source: Market.us)
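
As a quick sanity check, the growth rate quoted above can be recomputed from the two market-size figures. This is a minimal sketch: the `cagr` helper is a name introduced here for illustration, and it assumes ten compounding years between the 2023 base and 2033.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.28 means 28%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: USD 2.1 billion in 2023, USD 25.6 billion by 2033.
rate = cagr(2.1, 25.6, years=10)
print(f"Implied CAGR: {rate:.1%}")  # prints "Implied CAGR: 28.4%"
```

The result matches the 28.4% in the forecast, so the three numbers are at least consistent with each other.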

But while the potential is exciting, there’s a flipside. The same technology that helps someone get their voice back could also be used to mimic a public figure or commit fraud. That’s why, as the tech gets better and more accessible, it’s important to talk about ethics, consent, and safeguards too.

(Source: webforum.org)

Voice cloning is here, and while it’s powerful and beneficial, it’s also dangerous. The big question now is: How do we use it responsibly?

Before we discuss innovation vs. ethics and where we draw the line, let’s first understand what AI voice cloning is and how it works.

What Is AI Voice Cloning and How Does It Work?

AI voice cloning is exactly what it sounds like—technology that can copy a person’s voice so closely that it’s hard to tell the difference between the real and the synthetic. With just a short voice sample, AI can recreate not only the way someone speaks but also their tone, accent, emotion, and even their unique speech quirks.

But how does it actually work?

It all starts with machine learning. The AI is trained on audio recordings of a person’s voice, learning the patterns in how they pronounce words, pause, and inflect their tone. Advanced models, like deep neural networks, then use this data to generate speech that sounds like it’s coming straight from that person—even if they never actually said the words.

There are two main types of voice cloning:

- Text-to-Speech (TTS): You type the words, and the AI reads them in the cloned voice.

- Speech-to-Speech: You speak, and the AI transforms your voice into the cloned one in real time.

Today, AI voice cloning is being used in everything from personalized digital assistants to voiceovers, virtual characters, audiobooks, and even restoring the voices of individuals who’ve lost the ability to speak. It's fast, scalable, and surprisingly lifelike.

So what is actually going on under the hood?

Voice cloning might sound like magic, but behind the scenes, it’s powered by some pretty sophisticated machine learning models—like Tacotron 2 from Google and VALL-E from Microsoft. These models break down the human voice into data, learn its patterns, and then use that knowledge to generate speech that sounds eerily real.

Tacotron 2: Turning Text Into Realistic Speech

Tacotron 2 is a text-to-speech (TTS) model. Here’s how it works:

- Input Text: You give the model written text.

- Spectrogram Generation: Tacotron 2 converts the text into something called a mel spectrogram, which is like a visual map of the sound—showing how frequencies change over time.

- Voice Synthesis with WaveNet: The spectrogram is then passed to a second model called a vocoder (a modified WaveNet in the original system), which turns this map into natural-sounding speech audio, complete with human-like tone, pacing, and inflection.
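
To make the "visual map of the sound" more concrete, here is a rough sketch of how a mel spectrogram can be computed from raw audio using only NumPy. It is not Tacotron 2's own code (the model predicts spectrograms from text rather than computing them from audio). The function names, frame size, hop size, and test tone are all assumptions chosen for illustration, and 80 mel bands is simply a common choice.

```python
import numpy as np


def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(sample_rate, n_fft, n_mels):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fft_freqs = np.linspace(0.0, sample_rate / 2, n_fft // 2 + 1)
    filters = np.zeros((n_mels, n_fft // 2 + 1))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        filters[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return filters


def mel_spectrogram(signal, sample_rate, n_fft=1024, hop=256, n_mels=80):
    """Return a log-mel spectrogram with shape (n_mels, n_frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    mel_power = power @ mel_filterbank(sample_rate, n_fft, n_mels).T
    return np.log(mel_power + 1e-10).T


# One second of a 440 Hz tone stands in for recorded speech.
sample_rate = 22050
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)

mel = mel_spectrogram(tone, sample_rate)
print(mel.shape)  # (80, 83): 80 mel bands across 83 time frames
```

Each column of the result describes one short slice of time, and each row describes how much energy falls into one frequency band. A vocoder's job is to turn a grid like this back into a waveform.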

Tacotron 2 learns from hours of real human voice recordings. Over time, it starts to pick up on the speaker's rhythm, accent, and even emotional expression.

VALL-E: Few-Shot Voice Cloning at Its Best

Microsoft’s VALL-E takes things even further with zero-shot or few-shot learning—meaning it can mimic a person’s voice using just a few seconds of audio.

Here’s what makes it different:

- Training on Huge Datasets: VALL-E is trained on tens of thousands of hours of diverse voice data (about 60,000 hours of English speech, according to Microsoft’s paper). It doesn’t just learn how words sound; it learns how people speak them across different contexts and emotions.

- Discretized Audio Tokens: Instead of generating a waveform directly, VALL-E uses a neural audio codec to break audio down into discrete units or “tokens.” It then generates new speech by predicting sequences of these tokens, conditioned on the text and a short sample of the target voice, similar to how language models like GPT predict words.

- Realism and Emotion: VALL-E can clone not only the voice but also the tone, emotion, and acoustic environment—making it incredibly realistic.
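
The idea of turning audio into discrete tokens can be illustrated with a toy example. The sketch below is not VALL-E's codec, which is a trained neural network. It only shows the underlying principle of mapping each audio frame to the nearest entry in a codebook, and the tiny codebook, frame values, and function names are all made up for illustration.

```python
import numpy as np

# Hypothetical codebook: 8 possible "audio tokens", each a 4-dimensional vector.
# A real codec learns its codebook from data; this one is hand-made.
codebook = np.arange(32, dtype=float).reshape(8, 4)


def encode(frames, codebook):
    """Map each frame to the index (token) of its nearest codebook vector."""
    distances = np.linalg.norm(frames[:, None, :] - codebook[None, :, :], axis=-1)
    return distances.argmin(axis=1)


def decode(tokens, codebook):
    """Turn tokens back into approximate frames by codebook lookup."""
    return codebook[tokens]


# Fake "audio frames": slightly noisy copies of known codebook entries.
rng = np.random.default_rng(0)
true_tokens = np.array([3, 3, 5, 1, 7, 0])
frames = codebook[true_tokens] + 0.1 * rng.normal(size=(6, 4))

tokens = encode(frames, codebook)
print(tokens.tolist())  # [3, 3, 5, 1, 7, 0]
```

Once speech is a sequence of integers like this, generating new speech becomes a sequence-prediction problem, which is why the comparison with GPT-style language models is apt.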

So, while Tacotron 2 is great for high-quality TTS, VALL-E excels at voice cloning—making it possible to recreate someone’s voice (with their permission, ideally) using just a short clip.

In simple terms, Tacotron 2 learns how to read text out loud like a human, and VALL-E learns how to talk like you using just a few seconds of your voice.

Both are examples of how machine learning (a subset of AI) is transforming speech synthesis from robotic and flat to truly human-sounding.

To make this more concrete, let’s look at real-life examples of AI voice cloning in commercial use.

Commercial Applications of AI Voice Cloning Are on the Rise in the U.S.

Here’s a detailed look at how AI voice cloning is being put to commercial use in the U.S. — offering big opportunities to companies in different sectors.

Personalized Customer Service & Call Centers

Financial services and telecoms are deploying cloned voices, sometimes of trusted brand figures or company leaders, to offer 24/7 support, reinforce brand consistency, and reportedly cut call times by up to 40%.

(Source: Reddit)

Content Creation, Marketing & Localization

Brands use cloned voices for automated voiceovers in ads, videos, and multilingual campaigns—without re-recording sessions, saving time and money.

(Source: Adweek)

Media, Entertainment & Dubbing

Studios are cloning iconic voices for multilingual dubbing and character voicing, keeping the original actor’s tone intact.

(Source: AP News)

Interactive Virtual Assistants & Digital Experiences

Businesses integrate personalized voice clones in their apps, smart devices, and community platforms to enhance engagement and brand recall.

(Source: Furhat Robotics)

AI voice cloning in the U.S. is exploding across sectors—customer service, marketing, entertainment, healthcare, and beyond—offering scalable cost savings, personalization, and unique brand experiences.

As powerful as AI voice cloning is, it also opens the door to some serious risks—and they’re no longer theoretical. From impersonating loved ones to defrauding companies, the misuse of cloned voices is becoming one of the most alarming threats in the digital age.

The Dark Side: Identity Theft, Fraud, and Deepfake Dangers of AI Voice Cloning

AI voice cloning isn’t just a tool; it’s a responsibility. If left unchecked, it can easily shift from innovation to exploitation. Below are the main ways in which it is raising alarms, and why it calls for responsible adoption.

Impersonation and Identity Theft

One of the scariest things about voice cloning is how easily it can be used to impersonate real people. Scammers have already used cloned voices to pretend to be family members in distress, asking for urgent money transfers. Others have faked the voices of CEOs to manipulate employees into transferring funds, a tactic known as “voice phishing” or vishing.

Deepfakes Go Vocal

We’re used to seeing deepfakes as videos, but voice deepfakes are now just as convincing—and harder to detect. When audio alone is enough to fool people into thinking they're hearing a public figure, news anchor, or even a government official, the consequences can be dangerous, from spreading disinformation to manipulating elections.

Corporate and Political Threats

There have already been high-profile incidents where cloned voices of politicians were used in robocalls to mislead voters. In January 2024, a robocall using an AI-generated imitation of President Biden’s voice urged New Hampshire voters to skip the state’s primary, raising alarm bells about how voice cloning could be weaponized for political disruption or social engineering attacks.

Legal Grey Zones and Loopholes

Many regions still lack clear laws regulating AI-generated voices. While states like Tennessee have taken steps (with the ELVIS Act) to protect performers from unauthorized use of their voice, most people—especially private citizens—are left without clear legal recourse if their voice is cloned without consent.

Psychological Impact

Beyond fraud, there's also an emotional cost. Imagine hearing the voice of a deceased loved one used without permission or having your own voice faked in a scandalous or threatening context. The psychological toll can be immense and deeply personal.

So how should this double-edged sword be handled, so that we get the most out of its potential while keeping its dangers in check?

As AI voice cloning continues to grow, so does the need for strict ethical standards, user consent protocols, and detection tools. Many AI companies in the U.S. are now implementing watermarking, usage tracking, and access controls—but broader public awareness and regulation are still catching up.

Even so, the ethical and legal implications are being taken seriously, through emerging safeguards, laws such as Tennessee’s ELVIS Act, misuse-detection tools, and policies focused on user consent and transparency.
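
To give a feel for what consent checks, access controls, and usage tracking might look like in practice, here is a deliberately simple sketch. Every name in it (`ConsentRecord`, `VoiceCloneGate`, the purpose strings) is hypothetical and not taken from any real product. A production system would also need identity verification, secure storage, and audio watermarking, none of which is shown here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    speaker_id: str
    allowed_uses: set[str]  # purposes the speaker has explicitly agreed to
    revoked: bool = False


@dataclass
class VoiceCloneGate:
    consents: dict[str, ConsentRecord] = field(default_factory=dict)
    usage_log: list[dict] = field(default_factory=list)

    def register(self, record: ConsentRecord) -> None:
        self.consents[record.speaker_id] = record

    def authorize(self, speaker_id: str, requester: str, purpose: str) -> bool:
        """Allow synthesis only with active consent covering this purpose."""
        record = self.consents.get(speaker_id)
        allowed = (
            record is not None
            and not record.revoked
            and purpose in record.allowed_uses
        )
        # Log every request, allowed or not, so misuse attempts leave a trail.
        self.usage_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "speaker_id": speaker_id,
                "requester": requester,
                "purpose": purpose,
                "allowed": allowed,
            }
        )
        return allowed


gate = VoiceCloneGate()
gate.register(ConsentRecord("alice", allowed_uses={"customer_service"}))

ok = gate.authorize("alice", "acme-support", "customer_service")
blocked = gate.authorize("alice", "unknown-party", "political_ads")
no_record = gate.authorize("bob", "acme-support", "customer_service")
print(ok, blocked, no_record)  # True False False
```

The design choice worth noting is that the default answer is "no": a voice with no consent record, a revoked record, or an unlisted purpose is refused, and the refusal itself is logged.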

Innovation vs. Ethics: Where Do We Draw the Line?

AI voice cloning is pushing the boundaries of what technology can do—but as with any powerful innovation, it forces us to ask some tough questions: Just because we can clone someone’s voice, does it mean we should?

On one hand, voice cloning is driving massive innovation. It’s helping brands scale personalized communication, enabling people with speech loss to speak again, and saving time and resources across media, customer service, and accessibility. AI companies in the U.S. are leading this charge, developing tools that are not only efficient but incredibly lifelike.

But the ethical concerns can’t be ignored.

What happens when someone’s voice is cloned without their consent? Or when it’s used to commit fraud, manipulate opinions, or create fake content that sounds alarmingly real? The line between creative innovation and harmful deception is becoming dangerously thin.

At the core of this debate are consent, transparency, and accountability:

- Did the person knowingly allow their voice to be used?

- Can users tell the difference between real and synthetic voices?

- Is there accountability when things go wrong?

Technology will always move faster than regulation—but that doesn’t mean ethics should be an afterthought. Whether you're an AI developer, a business, or a consumer, there’s a shared responsibility to ensure voice cloning is used to enhance trust, not erode it.

The bottom line? Innovation should not come at the cost of identity, privacy, or truth. Drawing the line isn't about limiting potential—it's about protecting people in a world where imitation is nearly indistinguishable from reality. As AI voice cloning becomes more widespread, the top AI consulting companies play a critical role in helping businesses deploy this technology ethically, ensuring transparency, regulatory compliance, and responsible use at every stage.

In short, AI voice cloning in the U.S. is both a technological breakthrough and a looming ethical crisis. While it offers incredible potential for creativity and accessibility, it also opens the door to identity theft and deepfake threats. The key lies in responsible innovation, clear regulations, and widespread awareness.

Conclusion: AI Voice Cloning Is a Breakthrough That Demands Responsibility

AI voice cloning represents a remarkable leap forward in how we interact with technology. From hyper-personalized customer service to voice restoration for individuals who’ve lost their ability to speak, its applications are rapidly transforming industries. This isn’t science fiction. It’s already happening, driven by rapid innovation from top speech & voice recognition companies in the U.S., which are leading the way globally.

But with such powerful capabilities comes an equally urgent responsibility.

While these advancements unlock exciting new possibilities, they also raise serious concerns: identity theft, misinformation, deepfake scams, and the erosion of trust. After all, voice is more than sound—it's personal, emotional, and tied directly to our identity. Misusing it can have lasting real-world consequences.

That’s why this technology must be developed and deployed with ethics at the forefront. It’s not enough to innovate fast—we must innovate responsibly. Consent, transparency, and accountability must become core principles for everyone involved, from top speech & voice recognition companies to regulators and everyday users.

In the end, AI voice cloning is not just a technological breakthrough—it’s a test of our values. Handled wisely, it could redefine human–machine interaction for the better. Handled recklessly, it could blur the lines between reality and manipulation in ways we’re not ready for.