Imagine you are sitting in a clinic, waiting for the doctor to arrive. Then suddenly, out of the blue, what do you see? A robot strides in and sits right across from you. Taken aback, you start fighting the thoughts that crowd your mind: “Oh my God, why on earth is a robot here? Where has the doctor gone? Will this robot ever be able to diagnose my condition correctly?” And so on.

Of course, AI in healthcare is a hot-button topic. While the scenario above still belongs to the realm of fiction, one cannot deny the efforts being made to use AI systems to enhance patient care and make medical practitioners' lives more manageable.

In fact, at the outset of the pandemic, many AI-driven systems were used for detection and outbreak monitoring. And, even now, AI systems are being used in vaccine and treatment development too.

Here’s the thing: while one cannot deny the potential benefits of AI in the medical sector, or how heavily the healthcare world has come to depend on it, there’s a deep-seated mistrust among users regarding the mechanism AI technology companies use to run certain medical applications.

This mechanism, popularly referred to as the BlackBox AI model, is used in several medical applications. It has proven very effective in low-risk tools such as patient-facing reminder apps for chronic disease management.

The issue arises in the case of complex medical apps, especially those used in areas like diagnoses and prescriptions. Your typical Blackbox AI is trained by feeding data into the algorithm and allowing it to ‘learn’ on its own. The problem with that is that we don’t know the logic behind the machine’s decisions.

That said, the black-box model can make highly accurate decisions because a machine learning system generates the results, which has earned it a reputation as a powerful decision-making algorithm. Despite that reputation, such AI systems fail to satisfy doctors’ need to know the model's inner workings, making it significantly harder for them to trust the diagnoses these systems offer.

After all, doctors need to know what the AI systems are up to and, more importantly, how they arrived at their inferences in order to treat their patients with confidence. More than anything, doctors need to explain to the patient, sometimes in explicit detail, the disease that has been diagnosed with the help of AI and how they plan to go about the treatment.

Put another way, the doctor is expected to talk to the patients straightforwardly and with empathy.

BlackBox AI undermines this connection with patients because its workings are so mysterious. It’s this inscrutability, despite the model's reputation for accuracy, that has paved the way for Explainable AI, or Transparent AI. Explainable AI comes armed with explicit explainability features that have increased its acceptance among the medical fraternity.

This post attempts to shed light on the various black-box issues that make doctors slow down and realize that all is not well with AI systems, and that they need to look for more refined versions in the form of Explainable AI. First, let’s understand what BlackBox AI actually is.

What is BlackBox AI, Basically?

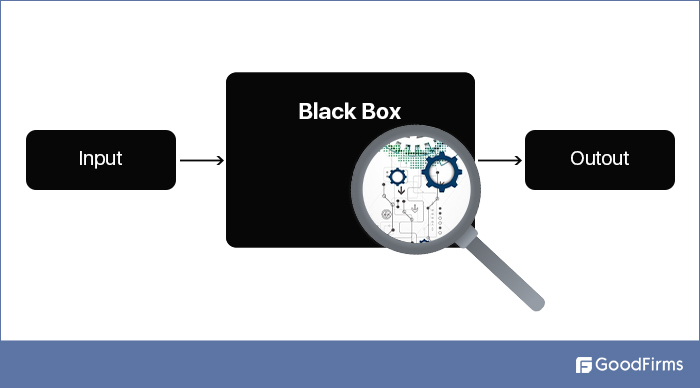

Black boxes are run by powerful yet complicated deep learning (DL) models that make accurate predictions. They lack transparency due to the enormous complexity of the neural connections and mathematical abstractions used. (Earlier neural networks had only 3–5 layers of connections, whereas current DL networks have more than 10 layers.) These deep neural networks are labeled “black boxes” because the models’ inner workings appear opaque to the outside observer.

In short, black-box models don't offer any explanation or interpretation of how the AI algorithms arrive at their results. Because of this, human expectations for explicit reasoning aren’t met, making these models a poor fit for high-stakes decision fields such as healthcare and autonomous machines, including self-driving cars, military drones, and more. On top of that, the companies building such algorithms often keep them under wraps as proprietary secrets.

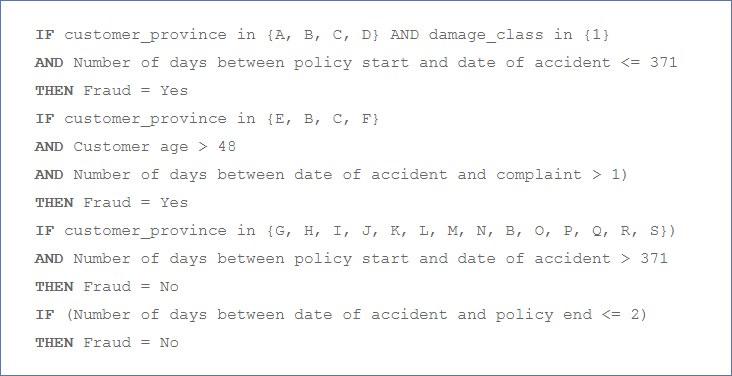

How is BlackBox AI Coded

A set of input features is fed into the model. The model then performs some complex calculations to arrive at a decision. However, users and even developers, including data scientists, cannot see what computation the model applies to arrive at its conclusions.
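To make the idea concrete, here is a minimal sketch of a black box in pure Python. It is only an illustration: the weights are hypothetical, hand-picked numbers standing in for what training would produce, and the three input features are made up. The point is that the caller gets a score back but nothing in the numbers says why.

```python
import math

# Hypothetical weights standing in for what training would have learned.
HIDDEN_WEIGHTS = [
    [0.8, -1.2, 0.5],
    [-0.4, 0.9, 1.1],
]
HIDDEN_BIASES = [0.1, -0.3]
OUTPUT_WEIGHTS = [1.5, -2.0]
OUTPUT_BIAS = 0.2


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def black_box_predict(features):
    """Return a risk score in (0, 1) for a list of three input features."""
    # Hidden layer: weighted sums passed through a non-linearity.
    hidden = [
        math.tanh(sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(HIDDEN_WEIGHTS, HIDDEN_BIASES)
    ]
    # Output layer: another weighted sum, squashed into a probability-like score.
    logit = sum(w * h for w, h in zip(OUTPUT_WEIGHTS, hidden)) + OUTPUT_BIAS
    return sigmoid(logit)


patient = [0.6, 0.2, 0.9]  # made-up, already-normalized feature values
score = black_box_predict(patient)
print(f"risk score: {score:.3f}")
```

Even in this tiny network, the weights do not read as reasons. A real deep model has millions of them, which is where the opacity comes from.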

Source: Rulex.Ai

How the Blackbox Model, the Impenetrable AI, Has Been Penetrating Our Everyday Lives

Guess what! Despite their opacity, black-box AI systems have become a bread-and-butter part of our everyday existence. Think of the typical uses of Amazon Alexa in our homes. “Alexa, play me the song of the day.” “Alexa, order Hugh Prather’s bestselling book ‘Notes on How to live in this world ...’ from Amazon.” “Alexa, put on the TV.” Alexa, do this; Alexa, do that. The list goes on and on.

As if that’s not enough, there is an array of AI applications that have inundated our lives: facial recognition apps that unlock our smartphones the moment we look at them, smartwatches that keep track of every step we take, and NFC rings that help lock and unlock our doors, among many others.

Turns out, the inscrutability of black-box AI is largely unimportant in low-stakes decision domains, because the results themselves legitimize the AI’s functioning. Think of AI apps that carry out daily chores, or low-risk tools such as patient-facing reminder apps for chronic disease management. Blackbox's impenetrability hurts most in high-stakes decision domains, such as medical applications and autonomous machines, where human lives are at stake.

Here’s the bald truth: Black boxes are biased. And because a black box is an impenetrable abyss, there’s no easy way to check for that bias, which makes it a risky proposition for high-stakes decision domains such as medical apps.

CIObulletin.com reported in 2018 that IBM’s supercomputer Watson for Oncology had allegedly given doctors at Memorial Sloan Kettering Cancer Center unsafe recommendations for treating cancer patients.

In one hypothetical case, when Watson was asked to recommend a drug for a patient with severe bleeding, the drug it suggested could have worsened the bleeding.

Okay, that’s what black boxes are all about. You still with me? Now, let’s move further and talk about how the black-box model stacks up against transparency challenges. In short, is the black box scary?

Is the black box scary?

Yes and No!

As mentioned before, black boxes work fine in personal, low-stakes settings. The issue arises once the black-box AI model is used in industries where human lives are at stake, because its covert way of functioning doesn’t gel with the human need to understand a decision before acting on it.

In the medical applications segment especially, blindly believing that an AI will get it right every time would be pure foolishness. Both doctors and patients want to know how the AI reached a particular diagnosis. This lack of transparency makes black-box AI hard to use in high-stakes domains.

Arguments Against Blackbox AI

- Lacks quality assurance

- Fails to evoke trust

- Limits patient-doctor dialogue

Black boxes Don’t Guarantee Quality Assurance

Errors

Technically speaking, the workings of an AI model should be out in the open. Since a black-box model’s reasoning is hidden, detecting errors in the model’s performance is extremely difficult. Errors creep into the fabric of the AI model through patchy training data, Trojan attacks, and many other routes. And even if mistakes are discovered, it’s very hard to debug their cause. Consequently, when humans fail to find even simple errors, the performance of seemingly powerful models suffers.

Biased

Not just doctors and patients, even developers find it challenging to identify and correct model biases. Ziad Obermeyer, a physician and professor at UC Berkeley, offered a profound example of harmful AI bias in medicine. According to Obermeyer, despite their best efforts, the designers failed to catch the racial bias that had crept into the algorithm: it had inadvertently used health care costs as a proxy for health care needs. The consequence: the medical application wrongly concluded that Black patients needed less medical care than white patients. The AI based its decision on data about historical spending on Black patients rather than on their actual medical needs.

Since less money had historically been spent on Black patients than on white patients, the algorithm concluded that Black patients were healthier. The result: had the bias gone undiscovered, less money would have continued to be spent on Black patients than on white patients.
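The proxy problem can be shown with a toy example. The sketch below uses entirely synthetic records (the groups "A" and "B", the need scores, and the cost figures are all made up) in which both groups have identical needs but less is spent on group B. It then compares who gets picked for extra care when ranking by cost versus ranking by need. It illustrates the mechanism only and is not a reconstruction of the actual algorithm.

```python
# Synthetic patients: (group, true_need, historical_cost). All numbers are made up.
patients = [
    ("A", 8, 8000),
    ("A", 6, 6000),
    ("A", 3, 3000),
    ("B", 8, 5600),  # same need as the first "A" patient, but lower spending
    ("B", 6, 4200),
    ("B", 3, 2100),
]


def top_k(records, key_index, k):
    """Return the k records with the highest value in the given column."""
    return sorted(records, key=lambda r: r[key_index], reverse=True)[:k]


def count_group(records, group):
    """Count how many of the records belong to the given group."""
    return sum(1 for r in records if r[0] == group)


K = 2  # hypothetical number of slots in an extra-care program
by_cost = top_k(patients, 2, K)  # what a cost-trained model effectively does
by_need = top_k(patients, 1, K)  # what we actually wanted

print("selected by cost:", by_cost)
print("selected by need:", by_need)
print("group B selected by cost:", count_group(by_cost, "B"))
print("group B selected by need:", count_group(by_need, "B"))
```

With these numbers, ranking by cost selects nobody from group B, while ranking by need selects one patient from each group. Nothing in the cost-based ranking looks wrong from the outside, which is why this kind of bias is hard to spot in an opaque model.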

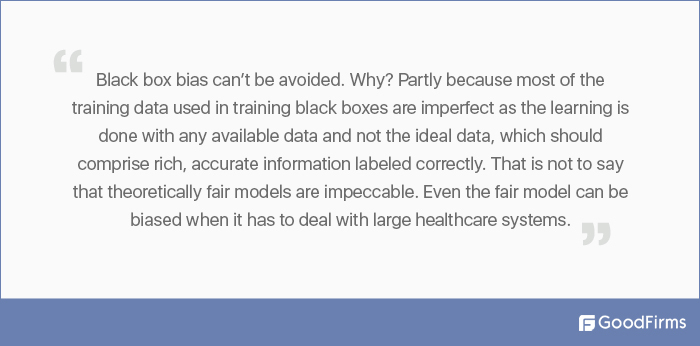

The catch: bias can’t be avoided entirely. Why? Partly because most of the data used to train black boxes is imperfect: the learning is done with whatever data is available rather than the ideal data, which would comprise rich, accurate, correctly labeled information. That is not to say that theoretically fair models are impeccable. Even a fair model can become biased when it is deployed across large healthcare systems.

Black boxes Don’t Evoke Trust

Uncertain Quality

Turns out, black boxes can prove largely accurate and reliable in one setting and quite the opposite in another. For instance, a black box may work fine in the lab but prove ineffective in the clinic. This uncertainty about quality means the model could commit errors that prove fatal to patients, or simply fail to deliver the expected benefits. The result: doctors, patients, government institutions, and the like hesitate to trust such AI tools.

Barely Interpretable

As mentioned, black boxes are barely interpretable. Clinicians and patients need interpretable models to understand the basis on which decisions are being made. To leverage black-box AI, clinicians have to trust not only the equations applied in the model but also the entire dataset behind it. That is asking too much of clinicians, who are expected to explain their recommendations to their patients before they act on them. On top of that, they need to present their recommendations to their peers and regulatory bodies too. Simply put, being able to justify their medical decisions is critical to medical practitioners, and black-box tools currently impede that.

Black boxes Obstruct Doctor-Patient Relationship

Doctors Lack Enough Data

The doctor-patient relationship depends on open conversations. These dialogues are critical because medicine is a complex subject, and even experts differ in their opinions. If the treating physician has deeper and newer insights into the patient's condition, they can have a quality dialogue with the patient. This is where black boxes fall short: they deliver a verdict without the insight behind it. Dependence on the black box could also erode practitioners' existing ethical and moral virtues.

Patients Lack Enough Data

When a model doesn’t offer adequate information and, on top of that, contains implied value judgments about patients' well-being, it could prove dangerous for patients. For example, if the model suggests chemotherapy to prolong a patient’s life when the patient cares more about quality of life, this could lead to difficult situations. Model incomprehensibility can make it difficult for doctors to make joint decisions with the patient and often undermines the patient's autonomy.

Long story short, the medical AI sector is still maturing, so passing final judgment on the use of AI in healthcare would be premature. The best way forward is for the healthcare domain to focus more on transparency in AI model design and model validation to build credibility around medical AI.

One way out, the experts suggest, is to improve present-day AI so that future AI delivers on the benefits it promises: that is, transparency, so that doctors and patients can trust the model. This is where Explainable AI plays a significant role.

Explainable AI - Righting the Black Box Wrongs

Explainable AI promises to enable humans to interpret algorithms that are intrinsically opaque and thereby build trust among users. Since the link between the data input and the output is made clear, the user knows how the model has reached its conclusion. The biggest plus: if the results are not along expected lines, the data feed can be adjusted to help the AI deliver better results.

Taking a Closer Look @ Explainable AI

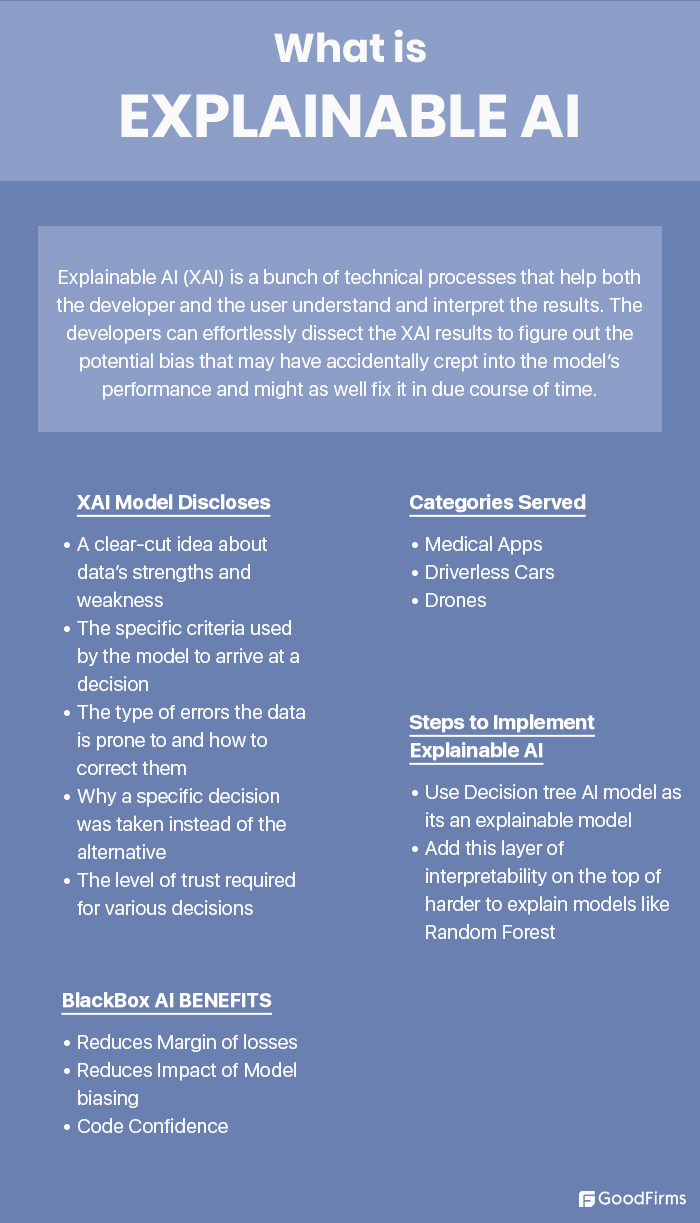

Explainable AI (XAI) is a set of technical processes that help both the developer and the user understand and interpret the results. Developers can dissect the XAI results to spot potential bias that may have accidentally crept into the model’s performance and fix it in due course.

Users, on the other hand, can start trusting the XAI’s inferences because they come with interpretations. The term “code confidence” is generally used for the trust developers and users place in the AI model’s inferences.

XAI Model Discloses:

- A clear-cut idea about the data’s strengths and weaknesses

- The specific criteria leveraged by the model to arrive at a decision

- The type of errors the data is prone to and how to correct them

- Why a particular decision was taken instead of the alternative

- The level of trust required for various decisions

Source: Darpa.MIL

Powered by explainable AI, new machine-learning systems will typically explain the logic, identify their strengths and weaknesses, and even give you a rough understanding of their future behavior.

Turns out: Leveraging XAI solutions would help the developer offer necessary code confidence to the users.

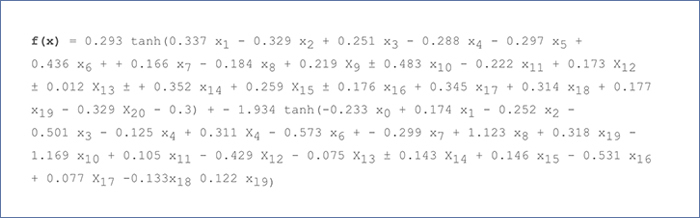

Explainable AI Leverages Transparent Models

A few interpretable structures that are already an integral part of many explainable models include linear regression, decision trees, shallow neural networks, and more. In the case of these explainable models, the input, output, and intermediate components are logically related. Not just that, all the intermediate components can be closely examined to reconstruct the reasoning process that generates the results.
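As a rough illustration of what "logically related" means for a linear model, the sketch below breaks a prediction into per-feature contributions. The coefficients, the feature names, and the patient values are all hypothetical and chosen only for the example. In a real setting they would come from fitting the model to data.

```python
# Hypothetical coefficients of an already-fitted linear risk model.
INTERCEPT = -1.0
COEFFICIENTS = {"age_decades": 0.30, "systolic_bp_scaled": 0.50, "smoker": 0.80}


def explain_linear(features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {
        name: COEFFICIENTS[name] * features[name] for name in COEFFICIENTS
    }
    score = INTERCEPT + sum(contributions.values())
    return score, contributions


patient = {"age_decades": 6.0, "systolic_bp_scaled": 1.2, "smoker": 1.0}
score, contributions = explain_linear(patient)

# Print the contributions from largest to smallest in absolute size.
for name, value in sorted(
    contributions.items(), key=lambda kv: abs(kv[1]), reverse=True
):
    print(f"{name:>20}: {value:+.2f}")
print(f"{'intercept':>20}: {INTERCEPT:+.2f}")
print(f"{'score':>20}: {score:+.2f}")
```

Because the score is just the intercept plus the listed contributions, a clinician can see which inputs pushed the result up or down and by how much. That is exactly the intermediate reasoning a deep network does not expose.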

Alongside these interpretable structures, efforts are being made to make deep models interpretable. To achieve this, developers might try to prune neural connections.

Making an AI interpretable may mean compromising somewhat on reliability and accuracy. That said, close partnership between AI developers, healthcare experts, and patients matters the most, as their valuable suggestions will help in the design of AI.

Sure enough, designing an interpretable model from scratch may seem impossible. However, post-hoc explanation methods help examine the behavior of a model once it has been created. All said and done, healthcare experts should prefer intrinsically interpretable models until post-hoc explanations can be theoretically justified.
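One widely used post-hoc method is permutation importance: shuffle one input column and see how much the model's accuracy drops. The sketch below is a minimal pure-Python version under stated assumptions. The `black_box` function is a made-up stand-in for an opaque model, the data is random, and the labels are simply the model's own predictions, so the baseline accuracy is 100% by construction.

```python
import random


def black_box(row):
    """Stand-in for an opaque model: we only call it, never inspect it."""
    x0, x1, x2 = row
    return 1 if (3.0 * x0 + 0.5 * x1) > 1.75 else 0  # x2 is ignored


def accuracy(model, rows, labels):
    """Fraction of rows for which the model output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, column, rng):
    """Accuracy drop after shuffling one column across all rows."""
    baseline = accuracy(model, rows, labels)
    shuffled_values = [r[column] for r in rows]
    rng.shuffle(shuffled_values)
    permuted = [
        r[:column] + (v,) + r[column + 1:] for r, v in zip(rows, shuffled_values)
    ]
    return baseline - accuracy(model, permuted, labels)


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [(rng.random(), rng.random(), rng.random()) for _ in range(500)]
labels = [black_box(r) for r in rows]

importances = [
    permutation_importance(black_box, rows, labels, c, rng) for c in range(3)
]
for column, drop in enumerate(importances):
    print(f"feature x{column}: accuracy drop {drop:.3f}")
```

The feature the model ignores gets an importance of exactly zero, and the heavily weighted one gets the largest drop. Note what the method does not do: it ranks inputs, but it does not reveal the rule the model applies, which is part of why post-hoc explanations are treated with caution.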

Explainable AI Focuses on Clinical Validation

An accurate model doesn’t automatically mean improved healthcare. For a medical AI to win users' trust, it should be obvious how doctors and patients will benefit from it. This calls for clinical validation, which mainly concerns diagnostic accuracy in a clinical setting.

A recent review found that 94% of 516 machine learning studies failed to pass even the first stage of clinical validation, raising the question of whether the potential of AI systems is being overrated.

Benefits of Explainable AI

If black boxes are the yin of AI, explainable AI systems are the yang. Though this AI is still in its infancy, the medical world has pinned great hopes on it.

Reduces Margin of losses

Medicine is a sensitive field in which a tiny margin of error can result in irreversible losses. Keeping an eye on the explanations behind the results will not only help developers reduce the chances of mistakes but also help them get to the root of these errors and further improve the underlying model.

Reduces Impact of Model biasing:

AI models tend to inherit bias from their training data. Having an explainable AI helps reduce such biased predictions. Think of Optum's healthcare algorithm, which was found to be biased against high-risk Black patients. Nearly 200 million people were reportedly affected by this biased approach.

Responsibility and Accountability:

AI model predictions are prone to errors. So it’s always an excellent idea to have a person who is responsible and accountable for the mistakes and who takes the necessary steps to fix them.

Code Confidence

With every explanation, users gain confidence in the system. Medical diagnosis is a high-stakes field, so a high level of code confidence encourages optimal utilization.

Explainable AI Examples

Yes, explainable AI is already working wonders in healthcare, specifically in cancer and retinopathies. In fact, the use of AI in the analysis and review of mammograms and radiology images has been reported to speed up the process 30-fold with 99% accuracy.

Case study 1:

In 2017, Stanford researchers successfully trained an algorithm to diagnose skin cancer. In 2018, Google’s DeepMind, in joint research with Moorfields Eye Hospital in London, trained a neural network to detect more than 50 types of eye disease. What sets this study apart from its predecessors is explainability: it shows how the computer arrived at some of its interpretations, overcoming the black box of data interpretation and inference seen in earlier work.

Case Study 2:

The Highmark Health team and IBM’s Data Science and AI Elite team are working to avoid costly inpatient sepsis admissions by identifying high-risk patients based on insurance claims data. The U.S. faces 720,000 sepsis cases annually, with a high mortality rate of 25–50%. The disease also costs more than USD 27 billion annually, making it one of the country’s most expensive inpatient conditions.

Case study 3:

In 2017, at the Maastricht University Medical Center in the Netherlands, surgeons used AI-assisted robotics to suture extremely narrow blood vessels, 0.3 to 0.8 millimeters across.

Sure thing, the surgeon still had total control of the robotic suturing. Not just that. There were several little tasks performed during a surgical process that only a skilled surgeon could do. The point is, there’s still a long way to go before AI apps can achieve the level of efficiency required by medical practitioners. However, for now, they are excellent helpers that can reduce outcome variability.

The Magic Combo - Explainable AI + Physicians

AI technology is emerging as a powerful agent in the healthcare realm. But then again, Explainable AI on its own can only do so much in the medical arena. To take it further, one needs to combine the power of AI algorithms with that of physicians. At the International Symposium on Biomedical Imaging, a competition of computational systems was held to detect metastatic breast cancer from biopsy images. The result: the winning program made the diagnosis with 92.5% accuracy; combining it with human pathologists’ opinion and expertise increased that number to 99.5%. So it goes without saying that along with AI, the role of humans is crucial for proper and accurate diagnoses of the human condition.
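The jump from 92.5% to 99.5% sounds modest until it is expressed as errors. The short calculation below uses only the two figures quoted above and treats them as plain accuracy percentages, which is an assumption about how they were measured.

```python
ai_alone = 92.5       # accuracy in percent, as quoted above
ai_plus_human = 99.5  # accuracy in percent, as quoted above

error_alone = 100.0 - ai_alone
error_combined = 100.0 - ai_plus_human
relative_reduction = (error_alone - error_combined) / error_alone

# Express the error rates as cases per 1,000 for readability.
print(f"errors per 1,000 cases: {error_alone * 10:.0f} -> {error_combined * 10:.0f}")
print(f"relative error reduction: {relative_reduction:.0%}")
```

Seen this way, the combination goes from 75 mistakes per 1,000 cases to 5, a cut of roughly 93% in the error rate.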

Steps to Implement Explainable AI

To balance accuracy, explainability, and risk, top Deep Learning companies can do a couple of things for you:

- Use a decision tree model, as it is an explainable model

- Add a layer of interpretability on top of harder-to-explain models such as Random Forest
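One possible reading of the second step is a global surrogate: train a small, readable model to imitate the predictions of the harder-to-explain one, then report how often the two agree. The sketch below does this in pure Python with a one-rule decision tree (a "stump"). Everything in it is hypothetical: `opaque_ensemble` is a made-up stand-in for an ensemble such as a random forest, and the `age` and `lactate` features and their ranges are invented for illustration.

```python
import random


def opaque_ensemble(row):
    """Stand-in for a hard-to-explain model: majority vote of three hidden rules."""
    age, lactate = row
    votes = [
        lactate > 2.2,
        lactate > 1.8,
        lactate + 0.01 * age > 2.6,
    ]
    return int(sum(votes) >= 2)


def fit_stump(rows, targets):
    """Find the single 'feature > threshold' rule that best reproduces targets."""
    best = (0.0, None, None)  # (fidelity, feature index, threshold)
    for feature in range(len(rows[0])):
        for threshold in sorted({r[feature] for r in rows}):
            agree = sum(
                int(r[feature] > threshold) == t for r, t in zip(rows, targets)
            )
            fidelity = agree / len(rows)
            if fidelity > best[0]:
                best = (fidelity, feature, threshold)
    return best


rng = random.Random(42)  # fixed seed so the run is repeatable
rows = [(rng.uniform(20, 90), rng.uniform(0.5, 4.0)) for _ in range(300)]
predictions = [opaque_ensemble(r) for r in rows]  # what the black box says

fidelity, feature, threshold = fit_stump(rows, predictions)
names = ["age", "lactate"]
print(f"surrogate rule: {names[feature]} > {threshold:.2f}")
print(f"agreement with the opaque model: {fidelity:.0%}")
```

The surrogate gives a rule a clinician can read, and the fidelity figure says how far that rule can be trusted as a summary of the opaque model. Where the two disagree, the simple rule is hiding something, so fidelity should always be reported alongside the explanation.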

Blackbox is the Yin, Explainable AI is the Yang

Explainable AI can be considered the better choice over black boxes because it is far more transparent. But then, explainable AI is not the ultimate, fool-proof, fail-safe model that can rescue the medical world from all sorts of misdiagnosis, given that it is still an emerging solution with proof-of-concept prototypes. There are still technology risks and gaps in machine learning and AI explanations for any application in question.