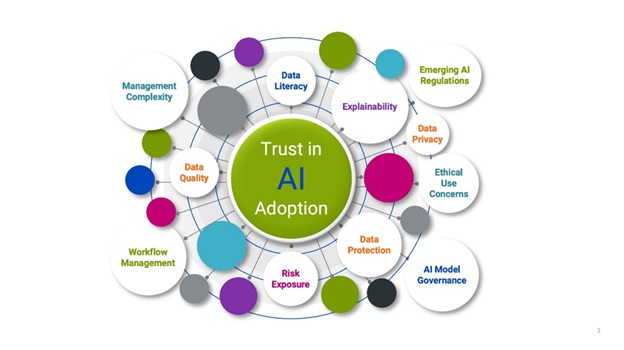

Businesses are leveraging artificial intelligence (AI) to boost efficiency, reliability, and quality. AI helps detect fraud, mitigate risks, and enhance customer satisfaction. But you need a reliable AI solution that works without flaws or bias. This is where quality assurance (QA) for AI can help.

QA for AI helps maintain the quality of constantly evolving AI-driven models. It can also ensure that AI models stay aligned with business goals as they update themselves with new data. QA can enhance accuracy, cut risks, and build trust in AI-driven decisions.

This blog explores the challenges and innovations in AI testing. You will also discover key trends and best practices for QA in AI.

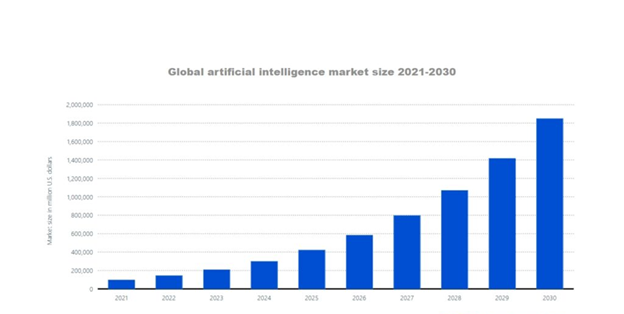

Source: Statista

Why AI is harder to test than traditional software

AI-driven software differs significantly from traditional applications. AI systems don't typically follow predefined logic or produce consistent outputs. Instead, they are dynamic and adaptive: they continuously learn from new data and keep evolving. This fundamental difference makes AI testing far more complex and challenging. Below are the key reasons why AI is harder to test than traditional software:

1. Non-deterministic behavior

Traditional software apps work on fixed rules and logic. This means the same input always produces the same output. However, AI models don’t guarantee identical outputs for the same input over time. AI models continuously update themselves based on new data, introducing unpredictability.

For example:

- A rule-based loan approval system always rejects an application that doesn’t meet specific conditions.

- An AI-powered loan approval system might approve an application today. But it can reject a similar one tomorrow due to shifts in its training data or algorithm updates.

Testing non-deterministic AI systems needs continuous validation, real-time monitoring, and retraining strategies. These help to track performance changes and detect inconsistencies.
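One simple form of continuous validation is to replay a fixed reference set through two model versions and measure how often their decisions differ. The sketch below is a minimal illustration, not a definitive implementation: `model_v1`, `model_v2`, the applicant fields, and the 25% tolerance are all hypothetical.

```python
from typing import Callable, Dict, Sequence

Applicant = Dict[str, float]  # hypothetical record: {"income": ..., "debt": ...}


def disagreement_rate(
    model_a: Callable[[Applicant], bool],
    model_b: Callable[[Applicant], bool],
    reference_set: Sequence[Applicant],
) -> float:
    """Fraction of reference inputs on which the two model versions disagree."""
    if not reference_set:
        raise ValueError("reference_set must not be empty")
    flips = sum(1 for x in reference_set if model_a(x) != model_b(x))
    return flips / len(reference_set)


# Two hypothetical versions of a loan model; v2 uses a stricter debt ratio cutoff.
def model_v1(a: Applicant) -> bool:
    return a["debt"] / a["income"] < 0.40


def model_v2(a: Applicant) -> bool:
    return a["debt"] / a["income"] < 0.35


reference = [
    {"income": 50_000, "debt": 10_000},  # ratio 0.20: both approve
    {"income": 50_000, "debt": 19_000},  # ratio 0.38: v1 approves, v2 rejects
    {"income": 50_000, "debt": 25_000},  # ratio 0.50: both reject
    {"income": 80_000, "debt": 30_000},  # ratio 0.375: v1 approves, v2 rejects
]

rate = disagreement_rate(model_v1, model_v2, reference)
MAX_ALLOWED = 0.25  # assumed tolerance; tune it for your use case
needs_review = rate > MAX_ALLOWED
```

Here half of the reference decisions change between versions, so the update would be flagged for review before release.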

2. Data-driven complexity

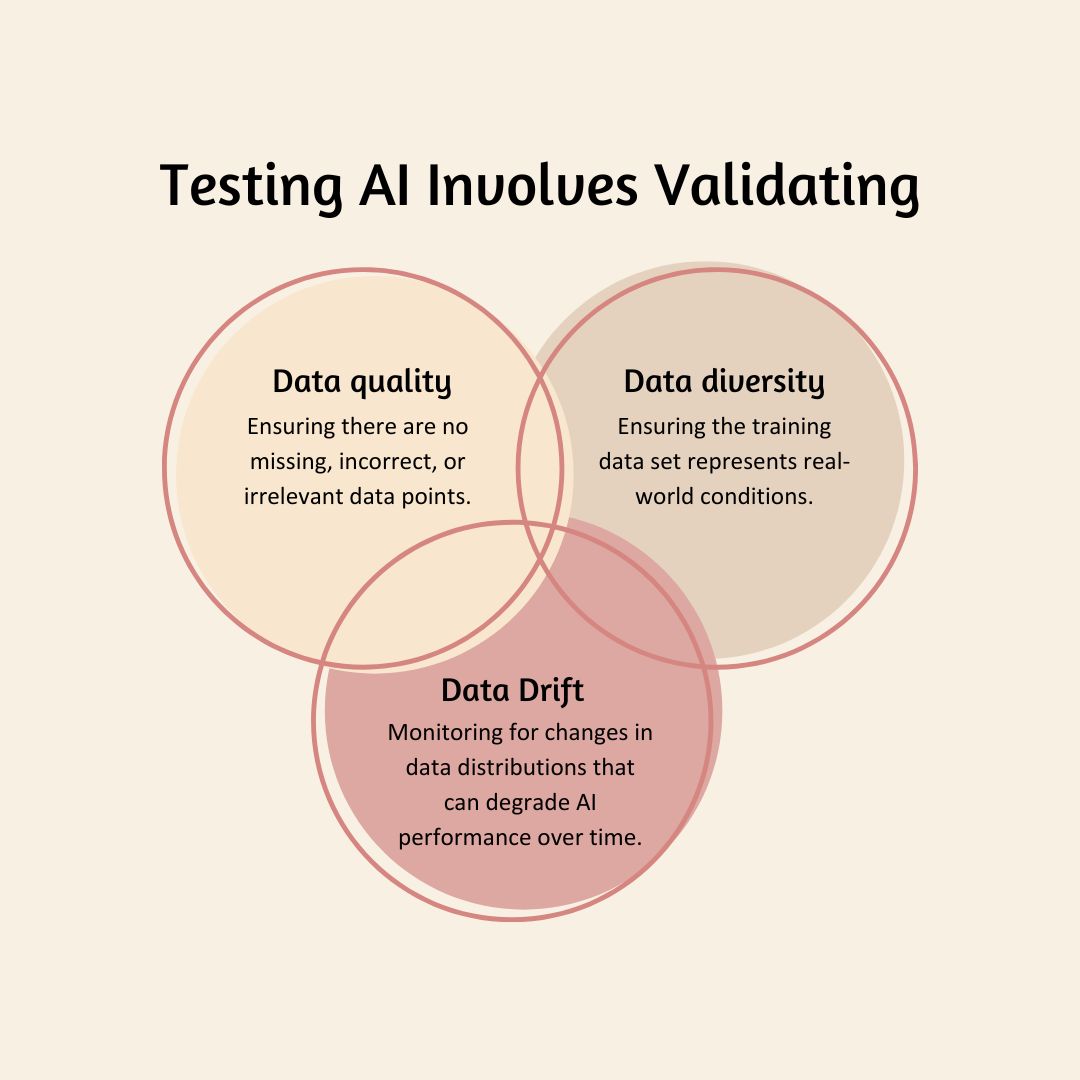

Traditional software testing focuses on code functionality. But AI models rely heavily on large, diverse datasets to make decisions. The quality, variety, and biases in these datasets directly affect AI behavior. Testing AI involves validating:

- Data quality: Ensuring there are no missing, incorrect, or irrelevant data points.

- Data diversity: Ensuring the training data set represents real-world conditions.

- Data drift: Monitoring for changes in data distributions that can degrade AI performance over time.

AI models adjust based on the data they receive. QA teams must continuously test data integrity and model performance to prevent accuracy degradation.
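The data quality and data drift checks above can be sketched in a few lines. This example assumes a missing-value rate for quality and the Population Stability Index (PSI) for drift; the bin edges, sample values, and the 0.25 alert threshold are illustrative assumptions (0.25 is a commonly cited rule of thumb, not a standard).

```python
import math
from typing import Optional, Sequence


def missing_rate(values: Sequence[Optional[float]]) -> float:
    """Share of records where the field is missing (None)."""
    return sum(v is None for v in values) / len(values)


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float]) -> float:
    """Population Stability Index between a baseline sample and a new sample.

    `edges` are ascending inner bin boundaries, giving len(edges) + 1 bins.
    """
    def bin_shares(sample: Sequence[float]) -> list:
        counts = [0] * (len(edges) + 1)
        for v in sample:
            idx = sum(v >= e for e in edges)  # index of the bin v falls into
            counts[idx] += 1
        eps = 1e-6  # floor that avoids log(0) for empty bins
        return [max(c / len(sample), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical income feature (in thousands): training data vs. production data.
baseline = [20, 30, 40, 50, 60, 70, 80, 90]
shifted = [60, 70, 80, 90, 100, 110, 120, 130]
edges = [40, 70]

drift_score = psi(baseline, shifted, edges)
drift_alert = drift_score > 0.25  # assumed alert threshold
```

A PSI of zero means the two samples fall into the bins in identical proportions; the larger the value, the further production data has moved from the training data.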

3. Black-box decision-making

Many AI models, particularly deep learning networks, act as "black boxes". This means their internal logic is difficult to interpret. This makes it challenging to:

- Decode an AI system’s decision-making process.

- Debug errors and incorrect predictions.

- Explain AI-driven outcomes to regulators, stakeholders, or end users.

Here, explainability testing techniques like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) can help. These methods attribute a prediction to the input features that drove it, which gives you digestible insight into AI decision-making.
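To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a tiny model by averaging each feature's marginal contribution over every feature ordering. It is a hand-rolled illustration, not the API of the `shap` library, and it only scales to a handful of features. The `score` function, feature names, and all-zero baseline are hypothetical.

```python
from itertools import permutations
from typing import Callable, Dict


def shapley_values(
    predict: Callable[[Dict[str, float]], float],
    instance: Dict[str, float],
    baseline: Dict[str, float],
) -> Dict[str, float]:
    """Exact Shapley values: average marginal contribution over all orderings.

    Features that have not been 'revealed' yet stay at their baseline value.
    """
    names = list(instance)
    totals = {n: 0.0 for n in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        prev = predict(current)
        for name in order:
            current[name] = instance[name]
            new = predict(current)
            totals[name] += new - prev  # marginal contribution of this feature
            prev = new
    return {n: t / len(orderings) for n, t in totals.items()}


# Hypothetical credit scoring function with an income-years interaction.
def score(x: Dict[str, float]) -> float:
    return 0.5 * x["income"] - 0.3 * x["debt"] + 0.1 * x["income"] * x["years"]


instance = {"income": 4.0, "debt": 2.0, "years": 5.0}
baseline = {"income": 0.0, "debt": 0.0, "years": 0.0}
phi = shapley_values(score, instance, baseline)
```

The attributions always sum to the difference between the prediction for the instance and the prediction for the baseline, which is a useful sanity check in an explainability test.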

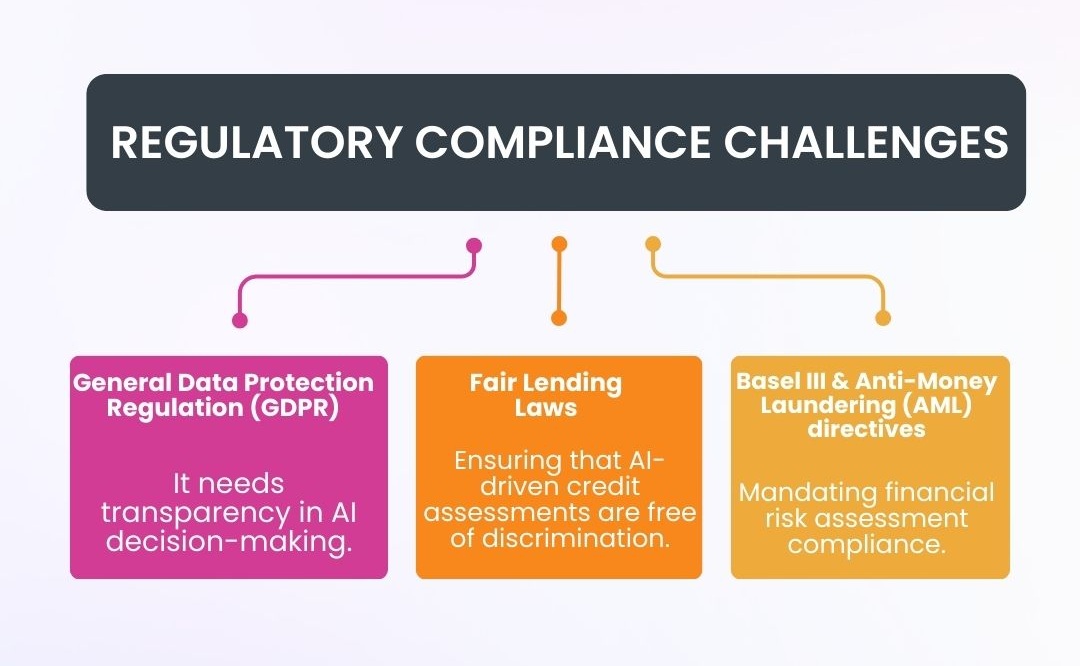

4. Regulatory compliance challenges

AI adoption in healthcare and banking & finance is governed by strict regulations. These include:

- General Data Protection Regulation (GDPR): Requires transparency in AI decision-making.

- Fair lending laws: Require AI-driven credit assessments to be free of discrimination.

- Basel III and Anti-Money Laundering (AML) directives: Mandate financial risk assessment compliance.

Traditional software follows static compliance rules. AI models, by contrast, must be audited continuously to ensure ongoing adherence to regulatory requirements. Implement automated compliance validation tools, fairness testing, and explainability frameworks. These will help you align AI models with industry laws and ethical AI standards.

5. Dynamic learning and model evolution

Traditional applications only change when developers update the code. However, AI models evolve dynamically based on new data, retraining, and environmental changes. This introduces challenges such as:

- Model drift: AI accuracy deteriorates as data patterns shift.

- Concept drift: The relationship between inputs and the correct outcome changes over time, for example, when consumer behavior shifts in loan approvals.

- Data poisoning: Malicious data inputs can corrupt AI training and lead to inaccurate predictions.

To reduce these issues, AI testing must include continuous monitoring, retraining strategies, and version control. Together, these help you track and manage changes effectively.
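Continuous monitoring for model drift can start as a rolling accuracy check over the most recent labeled predictions. The sketch below is one possible shape for it; the `AccuracyMonitor` class, the window size, and the 0.8 accuracy floor are assumptions for illustration.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window: int, min_accuracy: float):
        self.outcomes = deque(maxlen=window)  # old outcomes drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence of degradation yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Alert only once the window is full, to avoid noisy early alarms.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


# Hypothetical stream of (predicted, actual) pairs from production.
monitor = AccuracyMonitor(window=5, min_accuracy=0.8)
stream = [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0), (1, 0), (0, 1)]
for predicted, actual in stream:
    monitor.record(predicted, actual)

retrain = monitor.needs_retraining()
```

After the last two wrong predictions, the window holds three correct and two incorrect outcomes, so accuracy falls to 0.6 and the monitor asks for retraining.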

Key challenges in AI quality assurance

AI systems introduce complexities that traditional software testing does not. Unlike conventional applications with fixed logic, AI models evolve over time. Below are some of the most pressing challenges QA teams face when testing AI models.

1. Unpredictable behavior

AI models do not operate with fixed logic like traditional software. They continuously adjust their decision-making based on new training data and changing input conditions. This means the same input may yield different outputs over time. Hence, it's difficult to ensure consistency and reliability.

QA teams must develop robust validation and revalidation strategies to track model performance over time. This includes testing AI systems under various scenarios, including edge cases. On top of that, you also need real-time monitoring of AI decisions to find and address anomalies. Establishing version control for AI models can also help maintain oversight of changes. It can ensure that updates do not introduce unintended consequences.

2. Bias and fairness

AI bias is one of the biggest challenges for businesses. Biased decisions can lead to regulatory penalties and reputational damage. Bias can arise from unbalanced training datasets, flawed data collection methods, or hidden prejudices in algorithmic decision-making.

QA teams need to implement bias detection frameworks. They can analyze AI decision-making for disparities across different demographic groups. One method is counterfactual fairness testing. It can check whether an AI model would make the same decision if certain protected attributes were different. These attributes include race, gender, or socioeconomic status.
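A simplified version of this check changes only the protected attribute of each record and flags any record whose decision flips. Note the assumptions: formal counterfactual fairness relies on a causal model and also considers proxy features, which this sketch ignores. Both models and the applicant fields are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Record = Dict[str, object]


def counterfactual_flips(
    model: Callable[[Record], bool],
    records: Sequence[Record],
    attribute: str,
    values: Sequence[object],
) -> List[Record]:
    """Return records whose decision changes when only `attribute` is altered."""
    flagged = []
    for rec in records:
        original = model(rec)
        for v in values:
            if v == rec[attribute]:
                continue
            variant = {**rec, attribute: v}  # copy with one field changed
            if model(variant) != original:
                flagged.append(rec)
                break
    return flagged


# Hypothetical models: one uses gender in its threshold, one does not.
def biased_model(r: Record) -> bool:
    threshold = 600 if r["gender"] == "M" else 650
    return r["credit_score"] >= threshold


def neutral_model(r: Record) -> bool:
    return r["credit_score"] >= 620


applicants = [
    {"credit_score": 630, "gender": "F"},
    {"credit_score": 700, "gender": "M"},
    {"credit_score": 610, "gender": "M"},
]

biased_flags = counterfactual_flips(biased_model, applicants, "gender", ["M", "F"])
neutral_flags = counterfactual_flips(neutral_model, applicants, "gender", ["M", "F"])
```

The biased model flips its decision for two of the three applicants, while the neutral model flips none.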

Augmenting datasets with more diverse examples also helps train AI systems on more representative data, which cuts the risk of biased decisions. Regular fairness audits and regulatory compliance checks further ensure AI models align with ethical AI principles.

3. Explainability and transparency

AI models often function as “black boxes”. You can’t interpret their decision-making processes easily. This becomes more challenging in regulated businesses where companies must justify AI-driven decisions to:

- Regulators

- Stakeholders

- Customers

Without transparency, organizations risk legal challenges and loss of trust. To fix this, QA teams must integrate explainability testing into the AI validation process. You can use techniques like:

- SHAP (Shapley Additive Explanations)

- LIME (Local Interpretable Model-agnostic Explanations)

These methods can break down AI decision-making into understandable components.

4. Performance and scalability

AI systems process large volumes of data in real time. Any performance degradation under high load can cause customer complaints and loss of revenue. Stress and load testing with real-world data helps verify performance at scale.

Latency testing measures AI response times under peak conditions to ensure system efficiency. You can also use horizontal scaling strategies like deploying AI models across distributed cloud environments to improve system resilience.
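A basic latency test times each prediction and compares a high percentile against a service-level target. The sketch below runs requests sequentially, so it is a starting point rather than a full load test; `dummy_predict` and the 50 ms target are hypothetical.

```python
import math
import time
from typing import Callable, List, Sequence


def measure_latency_ms(predict: Callable, inputs: Sequence) -> List[float]:
    """Time each call to `predict` and return the latencies in milliseconds."""
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of `values` (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def dummy_predict(x):  # hypothetical stand-in for a real model call
    return sum(x) > 1.0


latencies = measure_latency_ms(dummy_predict, [[0.2, 0.9]] * 200)
p95 = percentile(latencies, 95)
SLA_MS = 50.0  # assumed latency target
within_sla = p95 <= SLA_MS
```

Tracking the 95th or 99th percentile rather than the average matters because a small share of slow responses is what users notice at peak load.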

5. Security vulnerabilities

AI models are now prone to cyber threats. Adversarial attacks can manipulate AI inputs to force incorrect predictions. Hackers can feed misleading input data to exploit vulnerabilities in AI-powered fraud detection systems. Attackers are also using data poisoning to corrupt training datasets.

QA teams can simulate attacks to evaluate AI model resilience. Model hardening techniques, such as adversarial training, can help AI systems detect and defend against manipulated inputs. Implementing continuous threat monitoring can detect emerging security risks in real time. AI-driven anomaly detection systems can also find unusual patterns in data.
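To illustrate an attack simulation, the sketch below perturbs the input of a linear fraud scorer in the direction that most quickly moves the score toward the opposite decision, in the spirit of a gradient-sign attack. The weights, bias, transaction features, and epsilon values are hypothetical, and real models would need a framework that exposes gradients.

```python
from typing import List, Sequence


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def score(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Linear fraud score; a transaction is flagged when the score is >= 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def worst_case_input(weights: Sequence[float], bias: float,
                     x: Sequence[float], epsilon: float) -> List[float]:
    """Shift every feature by at most epsilon toward the opposite decision.

    For a linear model the gradient of the score is `weights`, so moving each
    feature along sign(weight) changes the score fastest.
    """
    direction = -1 if score(weights, bias, x) >= 0 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


def is_robust(weights: Sequence[float], bias: float,
              x: Sequence[float], epsilon: float) -> bool:
    """True if the decision survives the worst-case epsilon perturbation."""
    before = score(weights, bias, x) >= 0
    after = score(weights, bias, worst_case_input(weights, bias, x, epsilon)) >= 0
    return before == after


weights, bias = [2.0, -1.0, 0.5], -1.0
transaction = [1.0, 0.5, 0.2]  # flagged as fraud: score is 0.6

robust_small = is_robust(weights, bias, transaction, epsilon=0.1)
robust_large = is_robust(weights, bias, transaction, epsilon=0.2)
```

The decision holds for a perturbation of 0.1 per feature but flips at 0.2, which tells you how much manipulation the model tolerates for this input.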

6. Regulatory and compliance risks

Businesses need to follow data privacy, risk management, and AI ethics requirements. AI systems must comply with laws and standards such as:

- GDPR (General Data Protection Regulation)

- CCPA (California Consumer Privacy Act)

- Basel III

Non-compliance can lead to hefty fines, legal liabilities, and reputational damage. You can achieve AI compliance through regulation-driven AI testing. This includes:

- Auditing AI models for compliance with industry standards

- Conducting impact assessments to evaluate potential risks

- Maintaining comprehensive audit trails for all AI-driven decisions
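One way to make an audit trail tamper-evident is to chain entries together with hashes, so that editing any past decision breaks verification. The sketch below is a minimal illustration under that assumption; the field names and the in-memory list are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(decision: Dict, prev_hash: str) -> str:
    payload = json.dumps({"decision": decision, "prev_hash": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(log: List[Dict], decision: Dict) -> Dict:
    """Append a decision, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"decision": decision, "prev_hash": prev_hash,
             "hash": _digest(decision, prev_hash)}
    log.append(entry)
    return entry


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; any edited or reordered entry fails the check."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["decision"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: List[Dict] = []
append_entry(audit_log, {"applicant_id": "A-1", "outcome": "rejected",
                         "model_version": "v1"})
append_entry(audit_log, {"applicant_id": "A-2", "outcome": "approved",
                         "model_version": "v1"})
trail_intact = verify(audit_log)
```

Recording the model version with each decision also lets auditors trace an outcome back to the exact model that produced it.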

Key trends in QA for AI

Traditional software testing methods are no longer sufficient to validate AI-driven applications. Here are the top trends:

1. AI-driven test automation (AI for QE)

AI-powered tools are improving software testing by:

- Automating test case generation and execution.

- Detecting defects faster than manual testing methods.

- Reducing human intervention in repetitive testing tasks.

- Enhancing test coverage using predictive analytics.

- Enabling self-healing test automation frameworks.

2. Explainability and interpretability testing

Regulatory bodies require AI decisions to be explainable. QA teams use techniques like:

- LIME (Local Interpretable Model-agnostic Explanations): Helps understand model predictions.

- SHAP (Shapley Additive Explanations): Identifies the importance of input variables.

- Counterfactual analysis: Evaluates how minor changes affect AI decisions.

3. Bias and fairness testing

To minimize AI bias, QA teams:

- Conduct fairness audits to detect discrimination in decision-making.

- Use diverse and representative datasets to train AI models.

- Develop bias-mitigation algorithms to ensure fairness.

4. Adversarial testing and security validation

AI-driven applications are vulnerable to security threats. QA teams simulate adversarial attacks to:

- Test AI model robustness against fraudulent manipulation.

- Strengthen model hardening techniques.

- Improve fraud detection accuracy.

5. Model drift detection and continuous monitoring

AI models degrade over time due to evolving data patterns. Model drift testing helps:

- Detect performance degradation early.

- Automate model retraining.

- Validate new data before it influences AI decisions.

6. Regulatory compliance testing for AI models

AI-driven applications must adhere to:

- Data privacy laws like GDPR and CCPA.

- Financial regulations like Basel III, AML directives, Fair Lending Laws.

- Auditability requirements for decision traceability.

7. Human-in-the-loop testing

While automation is essential, human testers play a crucial role in:

- Evaluating AI decisions for ethical correctness.

- Assessing edge cases AI might miss.

- Enhancing customer satisfaction through user acceptance testing.

Innovations in AI testing services

With the growing adoption of AI, legacy software testing methods can’t fully address the needs of AI-powered solutions. QA teams now face issues like non-deterministic behavior, explainability, and ethical concerns. To handle these issues, you need cutting-edge AI testing services. Below are the key innovations shaping AI testing:

1. Automated AI model testing

Since AI models evolve continuously, manual testing is impractical. Automated AI testing tools streamline the validation process by:

- Generating synthetic test data: This evaluates model performance across various conditions.

- Running automated adversarial tests: These check how AI handles unexpected inputs.

- Performing continuous integration (CI) testing: This validates AI updates in real time.

For example, frameworks like DeepTest and AIDT (AI-driven testing) automate regression testing for AI models, ensuring stable performance across updates.

2. AI-powered test case generation

Traditional software testing relies on predefined test cases. But AI applications require dynamic and adaptive test scenarios. AI-driven test automation tools use:

- Machine learning (ML) algorithms: ML algorithms identify potential failure points.

- Fuzz testing: It can feed random, unexpected inputs to test model resilience.

- Predictive analytics: It can generate test scenarios based on real-world usage patterns.
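Fuzz testing, for instance, can be as simple as feeding random and extreme values to a model and checking an invariant on the output. The sketch below assumes the invariant "the output is a finite probability between 0 and 1". Both sigmoid models and the input ranges are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence


def fuzz_model(predict: Callable[[Sequence[float]], float],
               n_cases: int, seed: int = 0) -> List[List[float]]:
    """Return the fuzzed inputs for which `predict` crashes or breaks the invariant."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    extremes = [0.0, -1.0, 1e12, -1e12, 1e-12]
    failures = []
    for _ in range(n_cases):
        x = [rng.choice(extremes) if rng.random() < 0.3
             else rng.uniform(-1e3, 1e3) for _ in range(3)]
        try:
            p = predict(x)
            ok = isinstance(p, float) and math.isfinite(p) and 0.0 <= p <= 1.0
        except Exception:
            ok = False  # any crash counts as a failure
        if not ok:
            failures.append(x)
    return failures


def naive_sigmoid_model(x: Sequence[float]) -> float:
    z = sum(x)
    return 1.0 / (1.0 + math.exp(-z))  # overflows for very negative z


def stable_sigmoid_model(x: Sequence[float]) -> float:
    z = sum(x)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


naive_failures = fuzz_model(naive_sigmoid_model, n_cases=200)
stable_failures = fuzz_model(stable_sigmoid_model, n_cases=200)
```

The naive model raises an overflow error on large negative inputs, which the fuzzer surfaces, while the numerically stable version passes every case.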

3. Explainability and interpretability testing

AI’s "black box" nature makes it difficult to understand why it makes a particular decision. Innovations in explainability testing provide insights into AI behavior. They can help you enhance transparency and compliance.

- SHAP and LIME: SHAP and LIME help break down AI predictions into understandable terms.

- Counterfactual testing: It assesses what minimal change in input would have led to a different AI decision.

- Causal AI testing: It evaluates if AI’s reasoning aligns with human logic and fairness principles.

For regulated industries like finance and healthcare, explainability testing can meet legal requirements and build user trust.
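The counterfactual testing idea above can be sketched as a search for the smallest change to one feature that flips a decision. This version uses a binary search and assumes the decision is monotonic in the chosen feature; the loan model, its thresholds, and the search bounds are hypothetical.

```python
from typing import Callable, Dict, Optional

Record = Dict[str, float]


def minimal_flip(model: Callable[[Record], bool], record: Record,
                 feature: str, upper: float, tol: float = 1.0) -> Optional[float]:
    """Smallest increase to `feature` (within `tol`) that flips the decision.

    Assumes the decision is monotonic in `feature`. Returns None when the
    decision does not flip anywhere up to `upper`.
    """
    original = model(record)

    def flipped(value: float) -> bool:
        return model({**record, feature: value}) != original

    lo, hi = record[feature], upper
    if not flipped(hi):
        return None
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if flipped(mid):
            hi = mid  # flip still happens at mid, so search lower
        else:
            lo = mid
    return hi - record[feature]


# Hypothetical loan model and a rejected applicant.
def loan_model(r: Record) -> bool:
    return r["income"] >= 45_000 and r["debt"] <= 20_000


applicant = {"income": 40_000, "debt": 10_000}
income_gap = minimal_flip(loan_model, applicant, "income", upper=100_000)
debt_gap = minimal_flip(loan_model, applicant, "debt", upper=100_000)
```

The result reads as an explanation a customer can act on: this applicant would have been approved with roughly 5,000 more income, while no increase in debt changes the outcome.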

4. Bias and fairness testing

AI models can unintentionally amplify biases present in training data. This can lead to unfair outcomes. Advanced AI testing services focus on:

- Bias detection frameworks: They scan datasets for demographic imbalances.

- Fairness-aware model retraining: It adjusts AI decisions for equitable outcomes.

- Ethical AI audits: They validate compliance with regulations like GDPR, EEOC guidelines, and fair lending laws.

Tools like IBM AI Fairness 360 and Google’s What-If Tool help identify and mitigate biases.
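A basic bias check of this kind compares approval rates across demographic groups. The sketch below hand-rolls a disparate impact ratio rather than calling either tool's API, and it assumes the "four-fifths" rule of thumb (a ratio below 0.8 warrants review) as the threshold. The groups and decisions are made-up illustration data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions: Iterable[Tuple[str, bool]]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Illustration data: group A approved 8 of 10 times, group B 5 of 10 times.
decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)

ratio = disparate_impact_ratio(decisions)
FOUR_FIFTHS = 0.8  # assumed review threshold
flag_for_review = ratio < FOUR_FIFTHS
```

A low ratio does not prove discrimination on its own, but it tells the QA team where to look more closely.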

5. Continuous monitoring and AI model observability

Unlike traditional apps, AI systems learn and change over time, so they need ongoing monitoring. Innovations in AI observability include:

- AI model drift detection: It helps identify when an AI’s accuracy declines due to shifting data patterns.

- Automated retraining pipelines: They ensure AI models stay up to date with real-world changes.

- AI logging and explainability dashboards: They track decision-making over time to detect anomalies.

For example, Fiddler AI and WhyLabs provide real-time monitoring solutions. They track AI performance, identify model degradation, and recommend corrective actions.

6. Security and adversarial testing for AI

AI models are vulnerable to cyber threats like data poisoning and adversarial attacks, in which attackers manipulate data to fool AI systems. To counteract these risks, security-driven AI testing includes:

- Adversarial robustness testing: It exposes AI to manipulated inputs to assess resilience.

- AI fuzzing: It feeds AI models random noise to detect vulnerabilities.

- Threat detection simulations: They check AI’s response to cyber threats in real-world scenarios.

For instance, MITRE ATLAS catalogs adversary tactics and techniques against AI systems, which QA teams can use to plan adversarial tests and check that models withstand security threats.

Best practices for QA in AI

Here are the top ten best practices for QA in AI:

1. Define clear AI testing objectives

Set measurable goals for AI testing, such as accuracy, fairness, explainability, and security. This aligns testing with business goals and regulatory needs while identifying key risk areas.

2. Ensure high-quality, unbiased training data

Diverse, representative datasets minimize bias and improve AI performance. Implement data validation techniques to detect inconsistencies, duplication, or demographic imbalances that may lead to unfair AI decisions.

3. Automate AI model testing

AI-driven test automation tools can run regression, performance, and adversarial tests at scale. Continuous testing keeps AI models stable, even with regular updates or new data.

4. Validate AI explainability and transparency

Employ techniques like SHAP and LIME to interpret AI decisions. Transparent models build trust, enhance compliance, and make debugging easier when errors occur.

5. Continuously monitor AI performance

AI models degrade over time due to data drift. Set up real-time monitoring tools to detect performance drops, trigger retraining, and maintain accuracy in production.

6. Conduct fairness and bias testing

Regularly assess AI for bias using fairness-aware tools like IBM AI Fairness 360. Adjust training data and model parameters to ensure equitable outcomes across different demographics.

7. Implement robust security testing

AI is vulnerable to adversarial attacks and data poisoning. Run adversarial testing, penetration testing, and security audits to safeguard against manipulation and breaches.

8. Use version control for AI models

Track model changes with experiment-tracking and model-registry tools like MLflow. This enables reproducibility, rollback capabilities, and precise documentation of model updates.

9. Test AI in real-world conditions

Simulate real-world scenarios, including edge cases, unexpected inputs, and environmental variations. These simulations help you assess AI reliability beyond controlled lab environments.

10. Ensure compliance with regulations

Align AI testing practices with legal frameworks like GDPR, HIPAA, or industry-specific standards. Compliance audits can cut legal risks and ensure ethical AI deployment.