DataRoot Labs

AI Solutions for Startups and Enterprises

DataRoot Labs is an award-winning company that builds and implements Artificial Intelligence, Machine Learning, and Data Engineering systems across industry verticals to help our clients build and launch AI-powered products and services.

Since 2016, we have specialized exclusively in AI development and consulting, building deep in-house expertise in Generative AI, Conversational AI, Natural Language Processing, Computer Vision, Machine Learning, Reinforcement Learning, Deep Learning, Edge ML, and other related areas. We are a US company with a global client base, leveraging the math and science excellence of Ukrainian engineers.

As a way to give back to the community, we run DataRoot University — a free, proprietary online school focused on machine learning and data engineering. Since 2018, over 6,000 students have enrolled, giving us access to a vibrant AI community and helping us attract and develop top talent for our team and the broader market.

United States

Industry Focus

- Automotive - 10%

- Art, Entertainment & Music - 10%

- Financial & Payments - 10%

- Healthcare & Medical - 10%

- Hospitality - 10%

- Manufacturing - 10%

- Media - 10%

- Information Technology - 5%

- Real Estate - 5%

- Transportation & Logistics - 5%

- Retail - 5%

- E-commerce - 5%

- Other Industries - 5%

Client Portfolio of DataRoot Labs

Project Industry

- Transportation & Logistics - 8.3%

- Business Services - 8.3%

- Manufacturing - 8.3%

- Healthcare & Medical - 8.3%

- Information Technology - 8.3%

- Automotive - 8.3%

- Gaming - 8.3%

- Education - 16.7%

- Food & Beverages - 8.3%

- Advertising & Marketing - 8.3%

- Retail - 8.3%

Project Cost

- Not Disclosed - 75.0%

- $10,001 to $50,000 - 16.7%

- $0 to $10,000 - 8.3%

Project Timeline

- 1 to 25 Weeks - 91.7%

- 51 to 100 Weeks - 8.3%

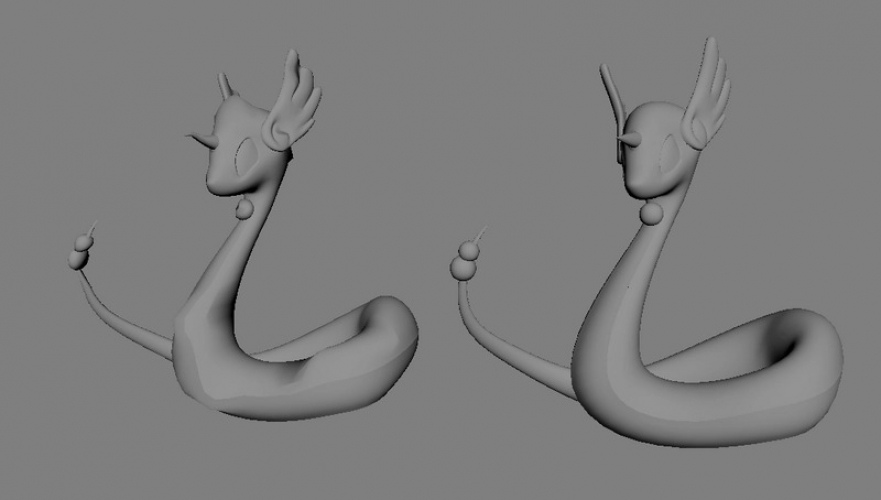

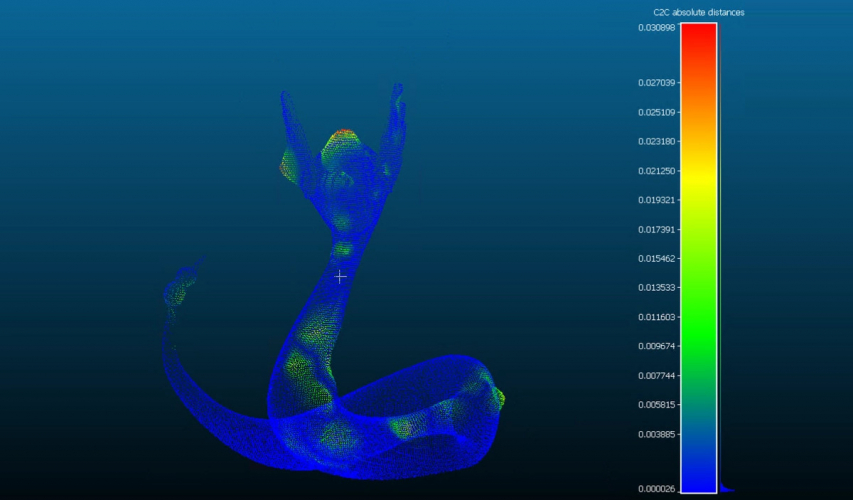

Portfolios: 12
