AI adoption in the U.S. healthcare system is underway, but progress is slow.

According to a Moneypenny survey of 750 U.S. businesses, 66% of healthcare companies are using or planning to use AI. Most companies adopt AI to improve marketing, decision-making, and productivity.

That said, adoption in the U.S. healthcare system is slow for several reasons, including distrust, limited understanding of the technology, a complex regulatory landscape, and a lack of clear government guidelines and risk mitigation standards.

To navigate these challenges, healthcare organizations are exploring partnerships with AI healthcare providers to guide strategic management.

This blog outlines six ways to overcome these challenges and make AI adoption in U.S. healthcare easier.

42% Reluctant, 84% Breaches: Shocking Stats on Why AI Adoption is Still Slow

- Deloitte’s 2023 consumer healthcare survey found that 21% of millennials considered the information provided by generative AI less credible. In 2024, this figure rose to 30%. Likewise, distrust among baby boomers rose from 24% to 32% over the same period. Source: techtarget

- An Ernst & Young survey revealed that 83% of physicians were concerned about using AI for personalized medical plans or assisting with diagnoses. Source: ey.com

- ChatGPT misdiagnosed 83 out of 100 pediatric medical cases. Source: psnet.ahrq

- 83% of U.S. consumers see the likelihood of AI committing mistakes as one of the major challenges for its adoption in healthcare. Source: medtronic

- 80% admit that a lack of basic knowledge and evidence about AI hinders adoption. Source: medtronic

- 89% of physicians want their vendors to be transparent about who created the content and how it was sourced. Source: wolterskluwer

- 42% of healthcare professionals in the U.S. are reluctant to use AI because of a lack of human interaction and data privacy concerns. Source: tebra

- 53% of Americans feel that AI is no match for human health experts, and 43% prefer human interaction and touch. Source: tebra

6 Ways to Simplify AI Adoption in U.S. Healthcare

AI adoption in the U.S. healthcare system is progressing slowly but steadily. Given the wide gap between the demand for and supply of healthcare services, and the way AI helps healthcare professionals speed up their work, it is high time to introduce corrective measures that simplify adoption.

1. Know Your Organization’s Objective to Zero In on the Right AI Tools

Before diving headlong into the AI world, it’s crucial to understand what your organization is after. Put another way, what are your organization’s goals, and what issues do you intend to address with the help of AI tools? Is it personalized patient care, better diagnostics, or greater operational efficiency?

A clear objective will help you narrow your AI adoption strategy and choose software that delivers meaningful impact. Without one, AI adoption could prove expensive and ineffective.

Say, for instance, a mid-sized hospital wants to reduce patient readmission rates caused by inconsistent follow-ups and impersonal treatment.

How AI fits in: The hospital can evaluate different AI software and choose a machine learning platform that scans electronic health records (EHRs), lab results, and genetic data to recommend tailored treatment plans for chronic disease patients. AI applications like IBM Watson Health (now Merative) or Tempus can analyze patient histories, genetic data, and clinical studies for this purpose. In such a scenario, the hospital could see readmission rates drop and patient satisfaction scores improve within six months.

Or, if your healthcare organization aims to reduce diagnostic errors, you should choose medical imaging or predictive analytics tools, like those used in early cancer detection, over general-purpose AI platforms. As the AI ecosystem evolves, its landscape is becoming crowded with tools. So, before zeroing in on a tool, compare it against your healthcare organization's objectives to make AI integration smooth and economical.

2. Design a New Narrative About AI: A Support System, Not a Ruthless Replacement

AI tools should be seen as complementary, helping humans with everyday tasks, not as competitors.

The more we consider AI a competitor to humans, the more we delay its adoption. We cannot stop a technology whose time has come. Positioning AI as an assistive technology will pave the way for easier adoption.

For instance, AI in healthcare can support the patient-physician relationship by relieving physicians of mundane tasks, such as filling in information in the electronic health record (EHR), so they can spend their limited time and attention on patient care.

UCHealth, a well-known health system in Colorado, uses NLP-powered AI healthcare tools such as Nuance DAX to help doctors automatically generate medical notes from doctor-patient conversations.

Result: The physician-patient relationship improved as physicians found more time to connect with patients. Cutting down on after-hours documentation also reduced physician burnout.

3. Evaluate Outcomes Before Rolling Out AI Applications Publicly

Before using AI tools in healthcare, it’s crucial to evaluate their effectiveness, just as we do with new diagnostic and therapeutic innovations, because premature AI deployment can lead to misdiagnosis, legal issues, and harmful biases.

This makes it imperative for payers (insurance companies), health systems (hospitals and clinics), and providers (doctors and care providers) to vet AI tools thoroughly, identify adverse effects, and work out how to mitigate them before rollout.

Physicians should carefully audit AI-driven diagnostic tools for bias in the underlying data related to race and other characteristics. The organization should also be able to customize the AI app based on clinicians' input.

Result: Safe, equitable, and bias-free AI tools that integrate smoothly into healthcare workflows.
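One way to picture such a bias check is to compare how often a diagnostic tool catches true cases in each patient group. The Python sketch below is only an illustration under stated assumptions: the group labels, the validation records, and the 10-point gap threshold are all hypothetical, and a real audit would rely on clinically agreed metrics and far larger samples.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return the true positive rate (sensitivity) for each group.

    Each record is a (group, actual, predicted) tuple, where actual and
    predicted are booleans meaning "has the condition".
    """
    positives = defaultdict(int)  # actual positive cases per group
    caught = defaultdict(int)     # actual positives the tool flagged
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}

def flag_gap(rates, max_gap=0.1):
    """Return True when the best and worst groups differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap

# Hypothetical validation records: (group, actual, predicted)
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, True), ("group_a", True, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_a", False, False), ("group_b", False, True),
]

rates = sensitivity_by_group(records)
needs_review = flag_gap(rates)  # hypothetical 10-point threshold
```

A flagged gap does not prove the tool is biased, but it tells the review team which groups deserve a closer look before rollout.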

4. Build a Strong, Secure, and Scalable Data Infrastructure

Data is the backbone of AI development. To make the most of data, healthcare organizations, including startups, should invest in developing a robust data infrastructure for collecting, storing, processing, and securing diverse healthcare data from electronic health records (EHRs), medical imaging, wearables, and more. They should also have clear data governance protocols to dictate how data is collected, managed, and accessed across the organization.

Considering 84% of healthcare organizations have experienced significant security breaches in the last 12 months, a robust data infrastructure is crucial for protecting patient data, which is becoming increasingly vulnerable to cyberattacks.

In collaboration with Google Cloud, Mayo Clinic has built a secure data platform that protects patient data using differential privacy. The setup gives AI researchers and clinicians seamless integration of imaging, genomic, and clinical data, speeding up research on disease prediction and treatment optimization.

Such infrastructure advancements, offered in partnership with machine learning providers for health systems, enabled Mayo Clinic to move towards faster AI model development, increasing diagnostic accuracy and enhancing patient data protection. The Mayo model has been an example for U.S. health systems pursuing AI innovation.

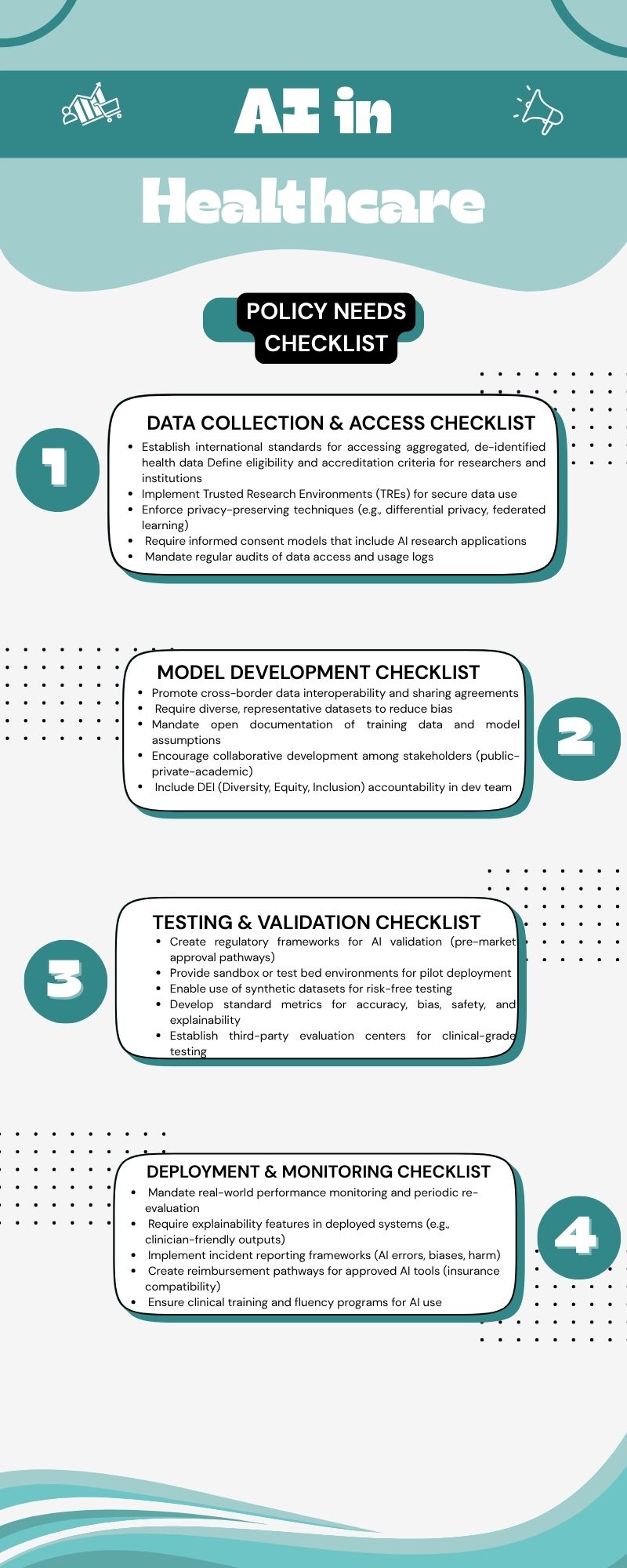

5. Implement Policies at Every Stage of the AI Lifecycle

AI-driven technologies can pose significant risks to patient safety. Therefore, safety policies should span the entire AI development lifecycle from data collection to deployment and monitoring.

The four stages of the AI lifecycle are 1) data collection and access, 2) model development, 3) testing and validation, and 4) deployment and monitoring.

Without policy coverage, AI can harm patients or fail to meet ethical and legal standards.

Here are a few examples of where policies are needed.

Data Access Standards at the Start

AI researchers and developers need access to large, diverse, and high-quality datasets at the beginning of the AI lifecycle. To ensure secure and ethical data use, they must work in secure research environments, such as Trusted Research Environments (TREs).

Also, stored data should be protected with privacy-preserving methods, like differential privacy, that allow researchers to analyze data without knowing individual identities. For example, the UK’s Health Data Research Innovation Gateway, part of a TRE model, provides secure, privacy-respecting access to health datasets for approved researchers.
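To make the idea of differential privacy more concrete, here is a minimal Python sketch of the Laplace mechanism applied to a simple counting query. It is an illustration only, not how any particular platform or TRE implements privacy: the patient ages, the `epsilon` value, and the function names are all hypothetical, and production systems should use a vetted privacy library.

```python
import random

def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(values, predicate, epsilon, rng=random):
    """Return a noisy count of the values that match predicate.

    A counting query changes by at most 1 when one person is added or
    removed, so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical data: ages of patients in a research cohort
ages = [34, 51, 67, 72, 45, 80, 59, 63, 70, 38]
rng = random.Random(42)  # seeded only so the sketch is repeatable
noisy_over_60 = private_count(ages, lambda a: a > 60, epsilon=0.5, rng=rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one keeps the answer closer to the true count. Researchers see only the noisy result, so no single patient's presence in the dataset can be confidently inferred from it.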

International Laws for Accreditation and Access to Research Environments

International laws for accreditation and access to research environments would enable cross-border data sharing without compromising data security. For instance, linking global health data with air pollution data would help researchers understand how environmental factors impact health.

Validation and Evaluation Policies at the End of the Pipeline

At the final stages, it’s not just about building AI but about testing it thoroughly and earning user trust. Policies needed to ensure accurate testing include validation frameworks that check models for correctness and bias, access to testing and certification tools, synthetic datasets for training and validation in place of real patient data, and real-world test beds (e.g., in hospitals) to evaluate performance in live settings.

Example: The FDA’s Digital Health Software Precertification (Pre-Cert) Pilot Program, which concluded in 2022, explored a streamlined approval process in which products undergo validation and real-world testing, with the aim of speeding up deployment while ensuring safety.

A policy checklist will ensure reliable, validated AI tools that are safe, transparent, and compliant with regulations.

6. Build a Cross-Functional Team

To successfully integrate AI into your healthcare ecosystem, you need diverse expertise that only a cross-functional team can provide. AI development teams should include physicians, data scientists, software engineers, domain experts, and so on. Additionally, investing in training and upskilling your workforce is crucial to ensure smooth collaboration with AI systems and accurate data interpretation.

Wrapping up

AI has immense potential to revolutionize American healthcare, be it diagnostics, personalized treatment, reducing physician burnout, or enhancing patient care. But successful AI adoption in healthcare isn’t about shiny new tools. It’s about building the right foundation: ensuring AI aligns with your healthcare goals, building secure data pipelines, designing patient-centric policies, and creating cross-functional teams. When these foundations are established, AI can revolutionize the U.S. healthcare system with precision, personalization, and equity in care delivery.

Looking to integrate AI healthcare tools in your organization? Check out Goodfirms' curated list of top AI Companies in the U.S. offering secure and scalable AI solutions.