Shahin Baharan, Growth Hacker at SB Growth AB

Posted on May 22, 2024

Delivered exceptional design and development for our app, making the process smooth and efficient.

We initially had a web application and just an idea of how we wanted our app to look. Eleken's talented designers stepped in, and one of them even took the initiative to create wireframes during our initial screening process. These wireframes turned out great, and we decided to work with them. I honestly have the highest recommendation for Eleken. Their expertise in React Native, which is hard to find these days, was a major plus.

The collaboration between their design team and development team was excellent. Communication was very clear, and they were incredibly responsive, even at odd hours when I didn't expect a reply. We had a dedicated project leader who truly loved working on our project. Communication through Slack and the structured feedback loops were top-notch. Overall, I only have the absolute highest ratings to give to Eleken.

What was the name of the project you worked on with Eleken?

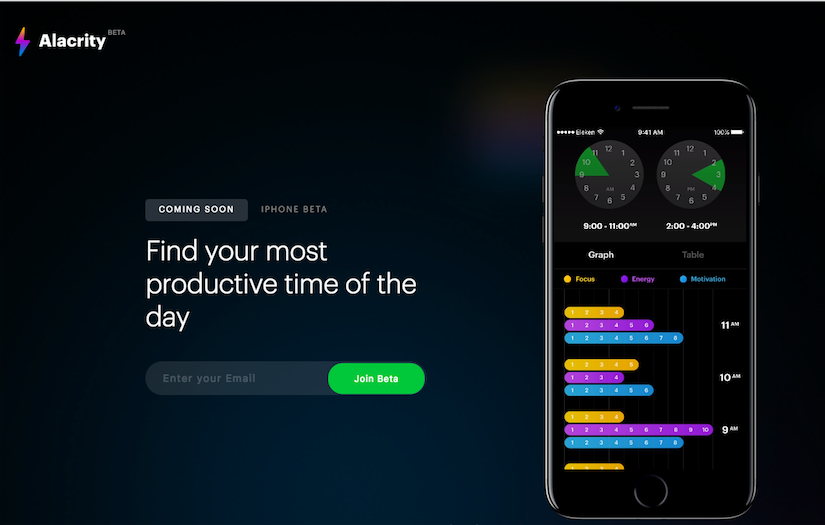

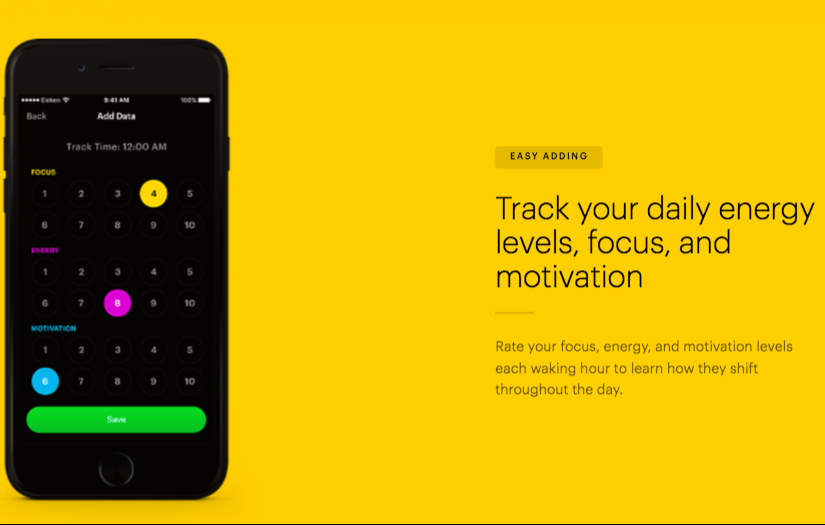

App development & Designing.

What service was provided as part of the project?

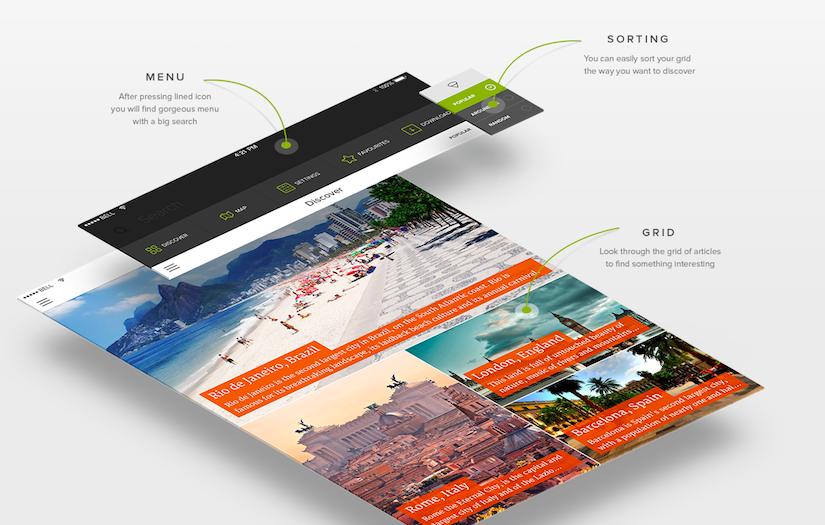

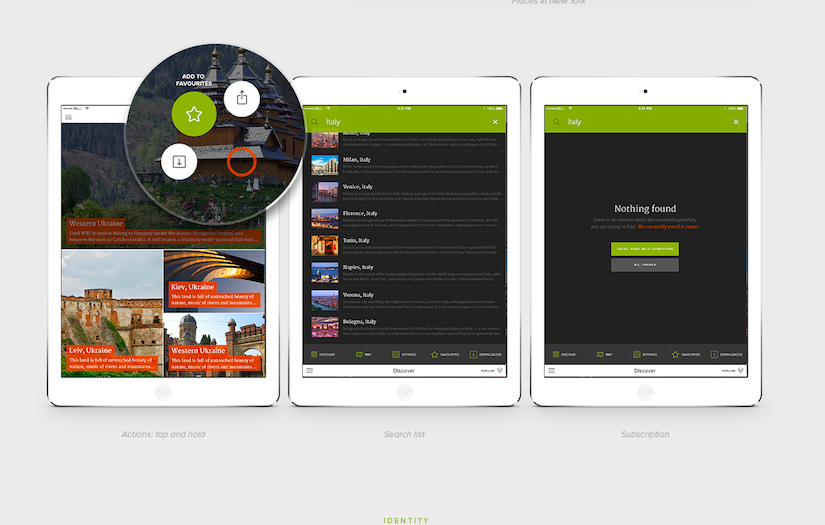

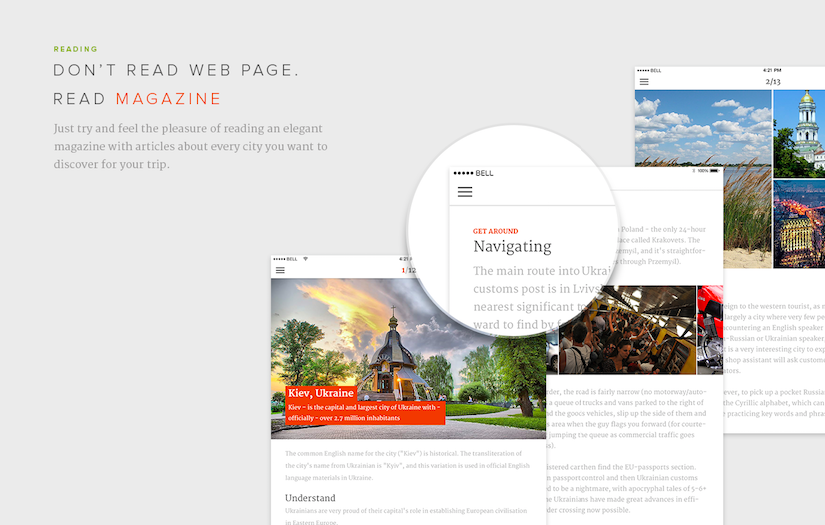

Mobile App Development, App Designing (UI/UX)

Describe your project in brief

Eleken helped us develop an app called My Toddler, a video streaming service for children and parents. We got a recommendation from a good friend who had a great experience with Eleken, particularly due to their expertise in React Native.

What is it about the company that you appreciate the most?

What I appreciate most about Eleken is their exceptional collaboration and communication. Their team was always responsive and clear, making the entire process smooth. The dedication of their project leader and the structured feedback loops were outstanding. Plus, their expertise in React Native is rare and highly valuable.

What did you dislike about the company, and what could they do better?

There was nothing I felt they could improve on, and I've worked with them for months.

Rating Breakdown

- Quality

- Schedule & Timing

- Communication

- Overall Rating

Project Detail

- $0 to $10000

- In Progress

- Other Industries